For virtual machines, incremental backup is very fast (based on QEMU dirty bitmaps, it takes a matter of seconds).
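A rough sketch of why a dirty bitmap makes VM incrementals so quick: every guest write marks the fixed-size blocks it touches as dirty, and the next backup reads only those blocks before clearing the bitmap. This is a hypothetical Python model (the block size, `TrackedDisk`, `write` and `backup` are illustrative names), not QEMU's or Proxmox's actual implementation.

```python
# Hypothetical model of dirty-bitmap incremental backup (NOT QEMU's code).
BLOCK_SIZE = 4096  # illustrative block granularity


class TrackedDisk:
    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        nblocks = (size + BLOCK_SIZE - 1) // BLOCK_SIZE
        # Before the first (full) backup, every block counts as dirty.
        self.dirty = [True] * nblocks

    def write(self, offset: int, payload: bytes) -> None:
        """Write to the disk and mark every block the write touches as dirty."""
        self.data[offset:offset + len(payload)] = payload
        first = offset // BLOCK_SIZE
        last = (offset + len(payload) - 1) // BLOCK_SIZE
        for block in range(first, last + 1):
            self.dirty[block] = True

    def backup(self, store: dict) -> int:
        """Copy only dirty blocks into `store`, clear their bits, return blocks read."""
        copied = 0
        for block, is_dirty in enumerate(self.dirty):
            if is_dirty:
                start = block * BLOCK_SIZE
                store[block] = bytes(self.data[start:start + BLOCK_SIZE])
                self.dirty[block] = False
                copied += 1
        return copied


if __name__ == "__main__":
    disk = TrackedDisk(1024 * BLOCK_SIZE)
    store: dict = {}
    print(disk.backup(store))  # 1024: first backup reads everything
    disk.write(10, b"hello")                 # touches block 0
    disk.write(5 * BLOCK_SIZE - 2, b"abcd")  # spans blocks 4 and 5
    print(disk.backup(store))  # 3: only the dirty blocks are read
```

The cost of an incremental run in this model is proportional to the amount of changed data, not to the disk size, which is the property the VM backups above benefit from.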

For LXC containers, however, there does not seem to be any incremental implementation.

LVM-Thin storage, backup mode: snapshot

1) initial backup

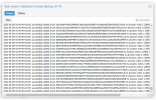

Code:

INFO: starting new backup job: vzdump 104 --node rise1rbx --storage pb --remove 0 --mode snapshot

INFO: Starting Backup of VM 104 (lxc)

INFO: Backup started at 2020-07-16 11:17:39

INFO: status = running

INFO: CT Name: bio

INFO: including mount point rootfs ('/') in backup

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: create storage snapshot 'vzdump'

INFO: creating Proxmox Backup Server archive 'ct/104/2020-07-16T15:17:39Z'

INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=none pct.conf:/var/tmp/vzdumptmp13900/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --backup-type ct --backup-id 104 --backup-time 1594912659 --repository root@pam@localhost:store2

INFO: Starting backup: ct/104/2020-07-16T15:17:39Z

INFO: Client name: rise1rbx

INFO: Starting protocol: 2020-07-16T11:17:39-04:00

INFO: Upload config file '/var/tmp/vzdumptmp13900/etc/vzdump/pct.conf' to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }' as pct.conf.blob

INFO: Upload directory '/mnt/vzsnap0' to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }' as root.pxar.didx

INFO: root.pxar.didx: Uploaded 30542429611 bytes as 8531 chunks in 342 seconds (85 MB/s).

INFO: root.pxar.didx: Average chunk size was 3580169 bytes.

INFO: root.pxar.didx: Time per request: 40153 microseconds.

INFO: catalog.pcat1.didx: Uploaded 1104138 bytes as 4 chunks in 342 seconds (0 MB/s).

INFO: catalog.pcat1.didx: Average chunk size was 276034 bytes.

INFO: catalog.pcat1.didx: Time per request: 85639779 microseconds.

INFO: Upload index.json to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }'

INFO: Duration: PT342.594309214S

INFO: End Time: 2020-07-16T11:23:22-04:00

INFO: remove vzdump snapshot

Logical volume "snap_vm-104-disk-0_vzdump" successfully removed

INFO: Finished Backup of VM 104 (00:05:44)

INFO: Backup finished at 2020-07-16 11:23:23

INFO: Backup job finished successfully

TASK OK

2) subsequent backup, after running git clone https://github.com/torvalds/linux.git

Code:

INFO: starting new backup job: vzdump 104 --node rise1rbx --storage pb --mode snapshot --remove 0

INFO: Starting Backup of VM 104 (lxc)

INFO: Backup started at 2020-07-16 11:30:52

INFO: status = running

INFO: CT Name: bio

INFO: including mount point rootfs ('/') in backup

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: create storage snapshot 'vzdump'

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "snap_vm-104-disk-0_vzdump" created.

WARNING: Sum of all thin volume sizes (1.66 TiB) exceeds the size of thin pool vmdata/vmstore and the size of whole volume group (343.46 GiB).

INFO: creating Proxmox Backup Server archive 'ct/104/2020-07-16T15:30:52Z'

INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=none pct.conf:/var/tmp/vzdumptmp22961/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --backup-type ct --backup-id 104 --backup-time 1594913452 --repository root@pam@localhost:store2

INFO: Starting backup: ct/104/2020-07-16T15:30:52Z

INFO: Client name: rise1rbx

INFO: Starting protocol: 2020-07-16T11:31:02-04:00

INFO: Upload config file '/var/tmp/vzdumptmp22961/etc/vzdump/pct.conf' to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }' as pct.conf.blob

INFO: Upload directory '/mnt/vzsnap0' to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }' as root.pxar.didx

INFO: root.pxar.didx: Uploaded 34743929478 bytes as 9598 chunks in 358 seconds (92 MB/s).

INFO: root.pxar.didx: Average chunk size was 3619913 bytes.

INFO: root.pxar.didx: Time per request: 37400 microseconds.

INFO: catalog.pcat1.didx: Uploaded 2639555 bytes as 8 chunks in 358 seconds (0 MB/s).

INFO: catalog.pcat1.didx: Average chunk size was 329944 bytes.

INFO: catalog.pcat1.didx: Time per request: 44873615 microseconds.

INFO: Upload index.json to 'BackupRepository { user: Some("root@pam"), host: Some("localhost"), store: "store2" }'

INFO: Duration: PT359.011667856S

INFO: End Time: 2020-07-16T11:37:01-04:00

INFO: remove vzdump snapshot

Logical volume "snap_vm-104-disk-0_vzdump" successfully removed

INFO: Finished Backup of VM 104 (00:06:09)

INFO: Backup finished at 2020-07-16 11:37:01

INFO: Backup job finished successfully

TASK OK
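The upload summaries in the two logs can be cross-checked with a little arithmetic. The sketch below parses the two "Uploaded ... bytes" lines (copied verbatim from the logs), reproduces the reported throughput and average chunk size, and computes the difference between the runs. The regex is mine, and treating the client's "MB/s" as truncated MiB/s is an assumption that happens to match both reported figures.

```python
import re

# Summary lines copied verbatim from the two vzdump logs above.
LOGS = {
    "initial": "INFO: root.pxar.didx: Uploaded 30542429611 bytes as 8531 chunks in 342 seconds (85 MB/s).",
    "after git clone": "INFO: root.pxar.didx: Uploaded 34743929478 bytes as 9598 chunks in 358 seconds (92 MB/s).",
}

UPLOAD_RE = re.compile(
    r"Uploaded (?P<bytes>\d+) bytes as (?P<chunks>\d+) chunks in (?P<secs>\d+) seconds"
)


def summarize(line: str) -> dict:
    """Extract size, chunk count and duration from an upload summary line."""
    m = UPLOAD_RE.search(line)
    if m is None:
        raise ValueError(f"not an upload summary line: {line!r}")
    size, chunks, secs = int(m["bytes"]), int(m["chunks"]), int(m["secs"])
    return {
        "bytes": size,
        "chunks": chunks,
        "seconds": secs,
        # Assumption: the client's "MB/s" is MiB/s (2**20 bytes), truncated.
        "mib_per_s": size // secs // 2**20,
        "avg_chunk": size // chunks,
    }


if __name__ == "__main__":
    first = summarize(LOGS["initial"])
    second = summarize(LOGS["after git clone"])
    for name, s in (("initial", first), ("after git clone", second)):
        print(name, s["mib_per_s"], "MiB/s, avg chunk", s["avg_chunk"], "bytes")
    print("extra bytes:", second["bytes"] - first["bytes"])
    print("extra seconds:", second["seconds"] - first["seconds"])
```

Both runs report processing the full container volume (about 30.5 GB and 34.7 GB), and the second run took slightly longer than the first even though it contained only about 4.2 GB more data. That is consistent with the whole root filesystem being read and chunked again, rather than only the changes as with the VM dirty bitmaps.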