One node in cluster is showing up with question mark

- Thread starter Shane Poppleton


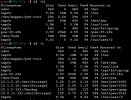

This can happen if that node can't reach some storage, typically a network one. Try running df -hl and df -h on that server. The first one lists only local filesystems and should run correctly, but the second one may never finish if some remote filesystem is unreachable.

We did have an issue where our QNAP was unresponsive... It is now responsive again and I have rebooted this node, but it still will not come up in the GUI.
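The comparison between the two df calls can be wrapped in a timeout so that a hung network mount does not block the shell. This is a minimal sketch, assuming GNU coreutils timeout is installed; the helper name probe and the 10-second limit are illustrative and do not come from the thread. A process stuck in uninterruptible I/O may not react to signals at all, so treat a "timed out" result as a hint rather than proof.

```shell
#!/bin/sh
# probe SECONDS COMMAND...: run COMMAND, giving up after SECONDS.
# Prints "ok", "timed out", or "failed (N)". Hypothetical helper.
probe() {
    limit=$1
    shift
    # -k 2: follow up with SIGKILL if the command ignores SIGTERM.
    timeout -k 2 "$limit" "$@" >/dev/null 2>&1
    rc=$?
    case $rc in
        0)       echo "ok" ;;
        124|137) echo "timed out" ;;   # 124: hit the limit, 137: had to be killed
        *)       echo "failed ($rc)" ;;
    esac
}

# Usage on the affected node:
#   probe 10 df -hl   # local filesystems only
#   probe 10 df -h    # also touches network mounts
```

If the first call reports ok and the second reports timed out, an unreachable remote filesystem is the likely cause.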

Okay, maybe Proxmox is looking for some other storage. Please try running pvesm status and paste its output.

What about pve-cluster.service? Any error on one of the other nodes?

Can you see the correct node/service status when you use pvesh get /cluster/resources (both on PVE-05 and on another node)?

What does the web interface look like when you access it through PVE-05?

I have the same issue. When I try to look around for the storage device with the issue, I get the following reply:

vgdisplay

Displays the first volume group, then:

Giving up waiting for lock.

Can't get lock for pve.

Cannot process volume group pve

The volume group with the issue should be the volume group pve (the default one).

Please paste the output of

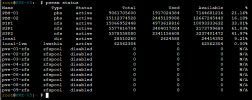

pvesm status in your systemOn other host I get the following

Name Type Status Total Used Available %

local dir active 98559220 12012976 81496696 12.19%

local-lvm lvmthin active 2181685248 643597148 1538088099 29.50%

On the affected host the command currently returns nothing; it just sits with no output until I ^C out.

Pretty much any action that involves looking at the volume group seems to time out.

Your case isn't the same as the OP's (in that case pvesm status did finish correctly).

Maybe you did some operation on your LV and the lock file is still in use? I would check lsof | grep "/var/lock/lvm/" to see if any process is holding the lock file for the pve LV. If there is any, check if it should/can be killed. Then remove the lock file, which should be /var/lock/lvm/pve

root@Proxmox03:~# lsof | grep "/var/lock/lvm/"

root@Proxmox03:~#

It returns nothing.

I also looked in the LVM lock folder:

root@Proxmox03:/var/lock/lvm# ls

P_global V_pve V_pve:aux

Hi,

could you also try lsof /var/lock/lvm? (Since /var/lock/ is usually a symlink to /run/lock/, the grep command might not match.)

Anything more in the output of vgdisplay -ddd (-d for debug)?

What's your pveversion -v?

So I noticed over the weekend that the locks seemed to drop, so I'm able to access the VG again. However, the machine still shows ? and the tasks are still showing as running, but I can't stop them. The VMs are also running as expected.

--- Volume group ---

VG Name pve

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 307

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 28

Open LV 25

Max PV 0

Cur PV 1

Act PV 1

VG Size 2.18 TiB

PE Size 4.00 MiB

Total PE 571549

Alloc PE / Size 567358 / 2.16 TiB

Free PE / Size 4191 / 16.37 GiB

VG UUID wyrDmj-0JH6-XnT3-9qE9-3c1K-Becs-OYz3o4

root@Proxmox03:~# pveversion -v

proxmox-ve: 7.0-2 (running kernel: 5.11.22-2-pve)

pve-manager: 7.0-10 (running version: 7.0-10/d2f465d3)

pve-kernel-5.11: 7.0-5

pve-kernel-helper: 7.0-5

pve-kernel-5.4: 6.4-4

pve-kernel-5.11.22-2-pve: 5.11.22-3

pve-kernel-5.4.124-1-pve: 5.4.124-1

pve-kernel-5.4.119-1-pve: 5.4.119-1

pve-kernel-5.4.114-1-pve: 5.4.114-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph-fuse: 14.2.21-1

corosync: 3.1.2-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: residual config

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.21-pve1

libproxmox-acme-perl: 1.1.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.0-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-5

libpve-guest-common-perl: 4.0-2

libpve-http-server-perl: 4.0-2

libpve-storage-perl: 7.0-9

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.9-2

lxcfs: 4.0.8-pve2

novnc-pve: 1.2.0-3

proxmox-backup-client: 2.0.4-1

proxmox-backup-file-restore: 2.0.4-1

proxmox-mini-journalreader: 1.2-1

proxmox-widget-toolkit: 3.3-4

pve-cluster: 7.0-3

pve-container: 4.0-8

pve-docs: 7.0-5

pve-edk2-firmware: 3.20200531-1

pve-firewall: 4.2-2

pve-firmware: 3.2-4

pve-ha-manager: 3.3-1

pve-i18n: 2.4-1

pve-qemu-kvm: 6.0.0-2

pve-xtermjs: 4.12.0-1

qemu-server: 7.0-10

smartmontools: 7.2-pve2

spiceterm: 3.2-2

vncterm: 1.7-1

zfsutils-linux: 2.0.5-pve1
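Going back to the earlier lock-file check: because /var/lock is usually a symlink to /run/lock, one option is to resolve the path first and then ask lsof about the real directory. The sketch below assumes readlink -f and lsof +D are available; the function names are hypothetical and not part of any Proxmox tooling.

```shell
#!/bin/sh
# resolve_lock_dir [PATH]: print PATH with all symlinks resolved.
# Defaults to the LVM lock directory mentioned in the thread.
resolve_lock_dir() {
    readlink -f "${1:-/var/lock/lvm}"
}

# lock_holders [PATH]: list processes that have files open under the
# resolved lock directory. Needs lsof and is best run as root.
lock_holders() {
    lsof +D "$(resolve_lock_dir "$1")"
}

# Usage on the affected node:
#   resolve_lock_dir     # shows where /var/lock/lvm really lives
#   lock_holders         # empty output means nothing holds a lock file open
```

Resolving the path first avoids the situation where a grep for /var/lock/lvm/ misses entries that lsof reports under /run/lock/lvm/.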

What is the output ofSo i noticed over the weekend the locks in seemed to drop so im able to access the VG again, however the machine still shows ? and the tasks are still showing as running but i cant stop them. the VMs are also running as expected.

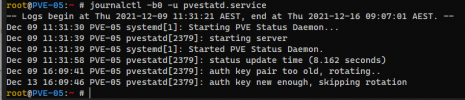

cat /var/log/pve/tasks/active on the node with the stuck tasks? Are there any errors in the output of journalctl -b0 -u pvestatd.service? What about /var/log/syslog?root@Proxmox03:~# cat /var/log/pve/tasks/active

UPID:Proxmox03:000500C0:1ACAC2E1:61CC82EF:qmstart:25510:root@pam: 0

UPID:Proxmox03:00050B18:1ACB80C0:61CC84D5:qmstart:14023:root@pam: 0

UPID:Proxmox03:0005416E:1AD4D69A:61CC9CBB:imgdel:14023@local-lvm:root@pam: 0

UPID:Proxmox03:001D09BE:1E48B34B:61D5736B:aptupdate::root@pam: 1 61D5737B OK

The ones with status 0 seem to be the ones that are stuck. Can those be killed manually somehow?
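To pick out the unfinished entries without reading the file by eye, a small filter can help. This sketch assumes the layout seen in the output above, where each line is a UPID followed by a status column that is 0 for tasks not yet marked as finished; the function name unfinished_tasks is made up for illustration.

```shell
#!/bin/sh
# unfinished_tasks: read task index lines on stdin and print the UPID
# of every entry whose second column (the status) is 0.
unfinished_tasks() {
    awk '$2 == "0" { print $1 }'
}

# Usage on the affected node:
#   unfinished_tasks < /var/log/pve/tasks/active
```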

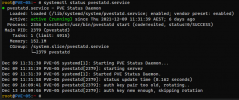

journalctl -b0 -u pvestatd.service

-- Journal begins at Sat 2022-01-01 01:16:05 EST, ends at Wed 2022-01-05 07:55:10 EST. --

-- No entries --

/var/log/syslog has stuff in it but everything is just a loop of the following

Jan 5 07:41:20 Proxmox03 smartd[1930]: Device: /dev/bus/0 [megaraid_disk_00] [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 77 to 76

Jan 5 07:42:00 Proxmox03 systemd[1]: Starting Proxmox VE replication runner...

Jan 5 07:42:01 Proxmox03 systemd[1]: pvesr.service: Succeeded.

Jan 5 07:42:01 Proxmox03 systemd[1]: Finished Proxmox VE replication runner.

Jan 5 07:43:00 Proxmox03 systemd[1]: Starting Proxmox VE replication runner...

The second part of the UPID is the PID in hexadecimal. You can use e.g. echo "ibase=16; 000500C0" | bc to convert it to decimal. And I'd check if the process is actually what you think it is (e.g. with ps --pid 327872) before sending a SIGTERM/SIGKILL.
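The same conversion can be done without bc. The sketch below assumes the usual UPID layout of UPID:node:pid:pstart:starttime:type:id:user: and relies on printf accepting a 0x-prefixed number; the function name upid_pid is hypothetical.

```shell
#!/bin/sh
# upid_pid UPID: print the decimal PID encoded in a UPID string.
# Assumes the layout UPID:node:pid:pstart:starttime:type:id:user:
upid_pid() {
    # Field 3 (counting the literal "UPID" as field 1) is the hex PID.
    hex=$(printf '%s' "$1" | cut -d: -f3)
    printf '%d\n' "0x$hex"
}

# Usage:
#   pid=$(upid_pid 'UPID:Proxmox03:000500C0:1ACAC2E1:61CC82EF:qmstart:25510:root@pam:')
#   ps --pid "$pid" -o pid,etime,args   # inspect before sending any signal
```

For the first stuck task this yields 327872, matching the PID used in the ps example above.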