Thank you for your reply.

I reinstalled all three nodes and re-created the cluster.

After the reinstallation, the three-node cluster worked well and all three nodes could see each other.

However, after about one hour, the same issue occurred again.

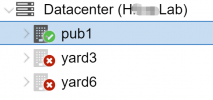

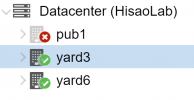

Currently, in this three-node cluster, pub1 cannot see yard3 or yard6, while yard3 and yard6 can see each other.

Screenshots: attachments 30054 and 30055.

Info as requested.

On pub1:

```bash
root@pub1:~# cat /etc/network/interfaces
auto lo
iface lo inet loopback

iface eno113 inet manual

auto vmbr0
iface vmbr0 inet static
        address 10.20.xx.xx/17
        gateway 10.20.xxx.xxx
        bridge-ports eno113
        bridge-stp off
        bridge-fd 0

iface eno114 inet manual

iface eno115 inet manual

iface eno116 inet manual
root@pub1:~# cat /etc/pve/corosync.conf
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: pub1
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.20.xx.xxx
  }
  node {
    name: yard3
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.16.xx.x
  }
  node {
    name: yard6
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.16.xx.xx
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: HxxxLab
  config_version: 3
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}
root@pub1:~# cat /etc/hosts
127.0.0.1 localhost.localdomain localhost
10.20.xx.xxx pub1.xxx.edu.cn pub1

# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts
root@pub1:~# pvecm nodes
Membership information
----------------------
    Nodeid      Votes Name
         2          1 pub1 (local)
```
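The ring0 addresses in the corosync.conf above put pub1 in 10.20.x.x/17 and yard3/yard6 in 10.16.x.x/17, and the nodes use different gateways. One quick way to check whether two nodes share a subnet, or whether corosync traffic between them has to cross a router, is Python's standard `ipaddress` module. This is a minimal sketch: the real addresses are masked in the post, so the host parts below are hypothetical placeholders (only the `10.20` / `10.16` prefixes and the /17 mask come from the configs), and yard6's prefix length is not shown and is assumed to be /17.

```python
import ipaddress

# Hypothetical stand-ins for the masked ring0 addresses. Only the first two
# octets (10.20 for pub1, 10.16 for yard3/yard6) and the /17 prefix are taken
# from the configs; the remaining octets are made up. yard6's /17 is assumed.
RING0 = {
    "pub1": "10.20.1.10/17",
    "yard3": "10.16.1.3/17",
    "yard6": "10.16.1.6/17",
}


def networks(ring0: dict[str, str]) -> dict[str, ipaddress.IPv4Network]:
    """Map each node name to the network its ring0 address belongs to."""
    return {name: ipaddress.ip_interface(addr).network for name, addr in ring0.items()}


def same_subnet(ring0: dict[str, str], a: str, b: str) -> bool:
    """True if nodes a and b are on the same layer-3 subnet (no router between them)."""
    nets = networks(ring0)
    return nets[a] == nets[b]


if __name__ == "__main__":
    for a, b in [("pub1", "yard3"), ("pub1", "yard6"), ("yard3", "yard6")]:
        print(f"{a} <-> {b}: same subnet = {same_subnet(RING0, a, b)}")
```

Because any 10.20.x.x and 10.16.x.x address differ in the second octet, they fall into different /17 networks regardless of the masked digits, so the pub1 pairs report `False` and yard3/yard6 reports `True`.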

On yard3:

```bash
root@yard3:~# cat /etc/network/interfaces
auto lo
iface lo inet loopback

iface enp68s0f0 inet manual

auto vmbr0
iface vmbr0 inet static
        address 10.16.xx.x/17
        gateway 10.16.xxx.xxx
        bridge-ports enp68s0f0
        bridge-stp off
        bridge-fd 0

iface enp193s0f0 inet manual

iface enp68s0f1 inet manual

iface enp193s0f1 inet manual
root@yard3:~# cat /etc/pve/corosync.conf
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: pub1
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.20.xx.xxx
  }
  node {
    name: yard3
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.16.xx.x
  }
  node {
    name: yard6
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.16.xx.xx
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: HxxxLab
  config_version: 3
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}
root@yard3:~# cat /etc/hosts
127.0.0.1 localhost.localdomain localhost
10.16.xx.x yard3.xxx.edu.cn yard3

# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts
root@yard3:~# pvecm nodes
Membership information
----------------------
    Nodeid      Votes Name
         1          1 yard3 (local)
         3          1 yard6
```
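To make the asymmetry explicit, the membership each node reports can be compared against the nodelist in corosync.conf. This is a minimal Python sketch, assuming the `pvecm nodes` text has already been captured as a string (here it is copied from the output above); the helper names `members` and `missing` are hypothetical and not part of any Proxmox tooling.

```python
import re

# Node names from the nodelist section of /etc/pve/corosync.conf shown above.
CONFIGURED = {"pub1", "yard3", "yard6"}

# `pvecm nodes` output as pasted in the post.
PUB1_OUTPUT = """\
Membership information
----------------------
    Nodeid      Votes Name
         2          1 pub1 (local)
"""

YARD3_OUTPUT = """\
Membership information
----------------------
    Nodeid      Votes Name
         1          1 yard3 (local)
         3          1 yard6
"""

# A membership row: numeric node ID, numeric vote count, then the node name.
# [ \t] (not \s) keeps each match on a single line.
ROW = re.compile(r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\S+)", re.MULTILINE)


def members(output: str) -> set[str]:
    """Return the node names listed in a `pvecm nodes` membership table."""
    return {m.group(3) for m in ROW.finditer(output)}


def missing(output: str, configured: set[str] = CONFIGURED) -> set[str]:
    """Configured nodes that do not appear in this node's membership list."""
    return configured - members(output)


if __name__ == "__main__":
    print("pub1 does not see:", sorted(missing(PUB1_OUTPUT)))
    print("yard3 does not see:", sorted(missing(YARD3_OUTPUT)))
```

Applied to the outputs above, pub1 lists only itself (so yard3 and yard6 are missing), while yard3 sees both itself and yard6 and misses only pub1, which matches the partition described at the top of the post.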