I have a three-node PVE 6.3 cluster running on three nearly-identical (identical except for RAM--two nodes have 48 GB; the third has 96 GB) blades of a Dell PowerEdge C6100, each with 2x Xeon X5650 CPUs, and each with a Chelsio T420-CR 2x 10Gbit NIC. Each pretty consistently fails to bring up either interface on boot.

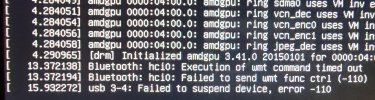

systemctl restart networking fails with an error, and ifup vmbr0 reports that another instance of ifup is already running. But the last time this happened (earlier today), I noticed that there were lots (over 50) of systemd-udevd processes running. That struck me as abnormal, so I ran systemctl restart systemd-udevd. This succeeded, though it took over a minute. Once it did, I ran systemctl restart networking, which succeeded in under a second; both interfaces came up, and the system was online.

/etc/network/interfaces is identical among the three systems except for IP addresses:

Code:

    root@pve1:~# cat /etc/network/interfaces
    # network interface settings; autogenerated
    # Please do NOT modify this file directly, unless you know what
    # you're doing.
    #
    # If you want to manage parts of the network configuration manually,
    # please utilize the 'source' or 'source-directory' directives to do
    # so.
    # PVE will preserve these directives, but will NOT read its network
    # configuration from sourced files, so do not attempt to move any of
    # the PVE managed interfaces into external files!

    auto lo
    iface lo inet loopback

    iface enp3s0f4 inet manual

    auto enp3s0f4d1
    iface enp3s0f4d1 inet static
        address 192.168.5.101/24

    iface eno1 inet manual

    iface eno2 inet manual

    auto vmbr0
    iface vmbr0 inet static
        address 192.168.1.3/24
        gateway 192.168.1.1
        bridge-ports enp3s0f4
        bridge-stp off
        bridge-fd 0

dmesg reports missing firmware. Proxmox has for some reason removed it from their packages (it's in the original Debian packages), but that doesn't seem to prevent the interfaces from being brought up manually:

Code:

    root@pve1:~# dmesg | grep cxgb
    [ 2.869044] cxgb4 0000:03:00.4: Direct firmware load for cxgb4/t4fw.bin failed with error -2
    [ 2.869050] cxgb4 0000:03:00.4: unable to load firmware image cxgb4/t4fw.bin, error -2
    [ 2.869377] cxgb4 0000:03:00.4: Coming up as MASTER: Initializing adapter
    [ 3.588928] cxgb4 0000:03:00.4: Direct firmware load for cxgb4/t4-config.txt failed with error -2
    [ 3.600882] cxgb4 0000:03:00.4: Hash filter with ofld is not supported by FW
    [ 4.352902] cxgb4 0000:03:00.4: Successfully configured using Firmware Configuration File "Firmware Default", version 0x0, computed checksum 0x0
    [ 4.560904] cxgb4 0000:03:00.4: max_ordird_qp 255 max_ird_adapter 589824
    [ 4.608903] cxgb4 0000:03:00.4: Failed to read filter mode/mask via fw api, using indirect-reg-read
    [ 4.707555] cxgb4 0000:03:00.4: 98 MSI-X vectors allocated, nic 16 per uld 16
    [ 4.707565] cxgb4 0000:03:00.4: 32.000 Gb/s available PCIe bandwidth (5 GT/s x8 link)
    [ 4.742324] cxgb4 0000:03:00.4 eth0: eth0: Chelsio T420-CR (0000:03:00.4) 1G/10GBASE-SFP
    [ 4.742685] cxgb4 0000:03:00.4 eth1: eth1: Chelsio T420-CR (0000:03:00.4) 1G/10GBASE-SFP
    [ 4.742788] cxgb4 0000:03:00.4: Chelsio T420-CR rev 2
    [ 4.742790] cxgb4 0000:03:00.4: S/N: PT36121180, P/N: 110112040F0
    [ 4.742793] cxgb4 0000:03:00.4: Firmware version: 1.16.63.0
    [ 4.742795] cxgb4 0000:03:00.4: Bootstrap version: 255.255.255.255
    [ 4.742797] cxgb4 0000:03:00.4: TP Microcode version: 0.1.9.4
    [ 4.742798] cxgb4 0000:03:00.4: No Expansion ROM loaded
    [ 4.742800] cxgb4 0000:03:00.4: Serial Configuration version: 0x4271203
    [ 4.742802] cxgb4 0000:03:00.4: VPD version: 0x1
    [ 4.742804] cxgb4 0000:03:00.4: Configuration: RNIC MSI-X, Offload capable
    [ 4.744883] cxgb4 0000:03:00.4 enp3s0f4d1: renamed from eth1
    [ 4.765240] cxgb4 0000:03:00.4 enp3s0f4: renamed from eth0
    [ 784.532945] cxgb4 0000:03:00.4 enp3s0f4d1: SR module inserted
    [ 784.736967] cxgb4 0000:03:00.4 enp3s0f4: SR module inserted
    [ 785.108039] cxgb4 0000:03:00.4: Interface enp3s0f4d1 is running DCBx-IEEE
    [ 785.108068] cxgb4 0000:03:00.4 enp3s0f4d1: link up, 10Gbps, full-duplex, Tx/Rx PAUSE
    [ 785.307924] cxgb4 0000:03:00.4: Interface enp3s0f4 is running DCBx-IEEE
    [ 785.307938] cxgb4 0000:03:00.4 enp3s0f4: link up, 10Gbps, full-duplex, Tx/Rx PAUSE
    [ 787.206943] cxgb4 0000:03:00.4: Port 0 link down, reason: Link Down
    [ 787.206967] cxgb4 0000:03:00.4 enp3s0f4: link down
    [ 787.806610] cxgb4 0000:03:00.4: Interface enp3s0f4 is running DCBx-IEEE
    [ 787.806634] cxgb4 0000:03:00.4 enp3s0f4: link up, 10Gbps, full-duplex, Tx/Rx PAUSE
    [ 787.906568] cxgb4 0000:03:00.4: Port 0 link down, reason: Link Down
    [ 787.906586] cxgb4 0000:03:00.4 enp3s0f4: link down
    [ 788.406302] cxgb4 0000:03:00.4: Interface enp3s0f4 is running DCBx-IEEE
    [ 788.406325] cxgb4 0000:03:00.4 enp3s0f4: link up, 10Gbps, full-duplex, Tx/Rx PAUSE
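
For anyone wanting to repeat the checks and the manual recovery described in this post, here is a rough sketch as shell functions. The function names and the FW_DIR variable are made up for illustration. The firmware file names are the two that dmesg reports as failing to load (error -2 means "no such file"), and /lib/firmware is assumed to be the firmware directory, as it is on stock Debian. This only reproduces the workaround; it is not a fix for whatever is leaving udev hung at boot.

```shell
#!/bin/sh
# Sketch only: helper names and FW_DIR are illustrative, not part of PVE.

# Report whether the firmware files that cxgb4 asked for in dmesg exist.
# FW_DIR defaults to /lib/firmware (assumption: standard Debian layout).
check_cxgb4_firmware() {
    fw_dir=${FW_DIR:-/lib/firmware}
    for f in cxgb4/t4fw.bin cxgb4/t4-config.txt; do
        if [ -e "$fw_dir/$f" ]; then
            echo "present: $f"
        else
            echo "missing: $f"
        fi
    done
}

# Count lines mentioning systemd-udevd in a process list read from stdin.
# grep -c exits non-zero when the count is 0, hence the "|| true".
count_udevd() {
    grep -c 'systemd-udevd' || true
}

# The manual recovery from the post: restart udev first, then networking.
# Needs root, and a local console since the network is down at this point.
recover_network() {
    echo "systemd-udevd processes: $(ps -e -o comm= | count_udevd)"
    systemctl restart systemd-udevd   # took over a minute on the affected node
    systemctl restart networking
}
```

After sourcing the file, check_cxgb4_firmware is safe to run at any time, while recover_network should only be run on a node that has come up without its interfaces. Printing the systemd-udevd count before restarting makes it easier to tell whether a given boot hit the same pile-up of udev processes.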