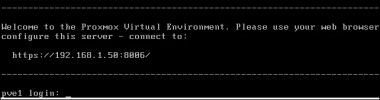

Proxmox Virtual Environment 7.2-3

Node 'pve'

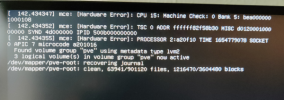

Jun 09 13:53:45 pve kernel: amdgpu 0000:08:00.0: amdgpu: VRAM: 8176M 0x0000008000000000 - 0x00000081FEFFFFFF (8176M used)

Jun 09 13:53:45 pve kernel: amdgpu 0000:08:00.0: amdgpu: GART: 512M 0x0000000000000000 - 0x000000001FFFFFFF

Jun 09 13:53:45 pve kernel: amdgpu 0000:08:00.0: amdgpu: AGP: 267894784M 0x0000008400000000 - 0x0000FFFFFFFFFFFF

Jun 09 13:53:45 pve kernel: [drm] Detected VRAM RAM=8176M, BAR=8192M

Jun 09 13:53:45 pve kernel: [drm] RAM width 128bits GDDR6

Jun 09 13:53:45 pve kernel: [drm] amdgpu: 8176M of VRAM memory ready

Jun 09 13:53:45 pve kernel: [drm] amdgpu: 8176M of GTT memory ready.

Jun 09 13:53:45 pve kernel: [drm] GART: num cpu pages 131072, num gpu pages 131072

Jun 09 13:53:45 pve kernel: [drm] PCIE GART of 512M enabled (table at 0x0000008000300000).

Jun 09 13:53:45 pve kernel: nouveau 0000:03:00.0: DRM: allocated 1920x1200 fb: 0x50000, bo 00000000a53d776e

Jun 09 13:53:45 pve kernel: Console: switching to colour frame buffer device 240x75

Jun 09 13:53:45 pve kernel: nouveau 0000:03:00.0: [drm] fb0: nouveaudrmfb frame buffer device

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: Will use PSP to load VCN firmware

Jun 09 13:53:48 pve kernel: [drm] reserve 0xa00000 from 0x81fe000000 for PSP TMR

Jun 09 13:53:48 pve kernel: [drm] Initialized nouveau 1.3.1 20120801 for 0000:03:00.0 on minor 0

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: RAS: optional ras ta ucode is not available

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: SECUREDISPLAY: securedisplay ta ucode is not available

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: smu driver if version = 0x0000000f, smu fw if version = 0x00000013, smu fw version = 0x003b2800 (59.40.0)

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: SMU driver if version not matched

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: use vbios provided pptable

Jun 09 13:53:48 pve kernel: amdgpu 0000:08:00.0: amdgpu: SMU is initialized successfully!

Jun 09 13:53:48 pve kernel: [drm] Display Core initialized with v3.2.149!

Jun 09 13:53:48 pve kernel: [drm] DMUB hardware initialized: version=0x0202000C

Jun 09 13:53:48 pve kernel: [drm] REG_WAIT timeout 1us * 100000 tries - mpc2_assert_idle_mpcc line:479

Jun 09 13:53:48 pve kernel: snd_hda_intel 0000:08:00.1: bound 0000:08:00.0 (ops amdgpu_dm_audio_component_bind_ops [amdgpu])

Jun 09 13:53:49 pve kernel: kfd kfd: amdgpu: Allocated 3969056 bytes on gart

Jun 09 13:53:49 pve kernel: memmap_init_zone_device initialised 2097152 pages in 8ms

Jun 09 13:53:49 pve kernel: amdgpu: HMM registered 8176MB device memory

Jun 09 13:53:49 pve kernel: amdgpu: SRAT table not found

Jun 09 13:53:49 pve kernel: amdgpu: Virtual CRAT table created for GPU

Jun 09 13:53:49 pve kernel: amdgpu: Topology: Add dGPU node [0x73ff:0x1002]

Jun 09 13:53:49 pve kernel: kfd kfd: amdgpu: added device 1002:73ff

Jun 09 13:53:49 pve kernel: amdgpu 0000:08:00.0: amdgpu: SE 2, SH per SE 2, CU per SH 8, active_cu_number 32

Jun 09 13:53:49 pve kernel: [drm] fb mappable at 0x7C004CF000

Jun 09 13:53:49 pve kernel: [drm] vram apper at 0x7C00000000

Jun 09 13:53:49 pve kernel: [drm] size 14745600

Jun 09 13:53:49 pve kernel: [drm] fb depth is 24

Jun 09 13:53:49 pve kernel: [drm] pitch is 10240

Jun 09 13:53:49 pve kernel: fbcon: amdgpudrmfb (fb1) is primary device

Jun 09 13:53:49 pve kernel: fbcon: Remapping primary device, fb1, to tty 1-63

Jun 09 13:53:49 pve kernel: [drm] REG_WAIT timeout 1us * 100000 tries - mpc2_assert_idle_mpcc line:479

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: [drm] fb1: amdgpudrmfb frame buffer device

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring gfx_0.0.0 uses VM inv eng 0 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.0.0 uses VM inv eng 1 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.1.0 uses VM inv eng 4 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.2.0 uses VM inv eng 5 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.3.0 uses VM inv eng 6 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.0.1 uses VM inv eng 7 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.1.1 uses VM inv eng 8 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.2.1 uses VM inv eng 9 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring comp_1.3.1 uses VM inv eng 10 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring kiq_2.1.0 uses VM inv eng 11 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring sdma0 uses VM inv eng 12 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring sdma1 uses VM inv eng 13 on hub 0

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring vcn_dec_0 uses VM inv eng 0 on hub 1

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring vcn_enc_0.0 uses VM inv eng 1 on hub 1

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring vcn_enc_0.1 uses VM inv eng 4 on hub 1

Jun 09 13:53:50 pve kernel: amdgpu 0000:08:00.0: amdgpu: ring jpeg_dec uses VM inv eng 5 on hub 1

Jun 09 13:53:50 pve kernel: [drm] Initialized amdgpu 3.42.0 20150101 for 0000:08:00.0 on minor 1

Jun 09 13:53:51 pve systemd[1]: Started Getty on tty1.

Jun 09 13:53:51 pve systemd[1]: Reached target Login Prompts.

Jun 09 14:02:05 pve pvedaemon[1510]: <root@pam> starting task UPID:pve:00000AEF:0000FCD7:62A1EF4D:qmstart:100:root@pam:

Jun 09 14:02:05 pve pvedaemon[2799]: start VM 100: UPID:pve:00000AEF:0000FCD7:62A1EF4D:qmstart:100:root@pam:

Jun 09 14:02:05 pve kernel: VFIO - User Level meta-driver version: 0.3

Jun 09 14:02:05 pve kernel: amdgpu 0000:08:00.0: amdgpu: amdgpu: finishing device.

Jun 09 14:02:05 pve kernel: Console: switching to colour dummy device 80x25

Jun 09 14:02:09 pve kernel: amdgpu: cp queue pipe 4 queue 0 preemption failed

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: amdgpu: Fail to disable thermal alert!

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa9900 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa9920 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa9940 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa9960 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa9980 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa99a0 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa99c0 flags=0x0020]

Jun 09 14:02:09 pve kernel: amdgpu 0000:08:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x0012 address=0xfffa99e0 flags=0x0020]

Jun 09 14:02:09 pve kernel: [drm] free PSP TMR buffer

Jun 09 14:02:09 pve kernel: [drm] amdgpu: ttm finalized

Jun 09 14:02:09 pve kernel: vfio-pci 0000:08:00.0: vgaarb: changed VGA decodes: olddecodes=io+mem,decodes=io+mem:owns=none

Jun 09 14:02:09 pve systemd[1]: Created slice qemu.slice.

Jun 09 14:02:09 pve systemd[1]: Started 100.scope.

Jun 09 14:02:11 pve kernel: vfio-pci 0000:08:00.0: vfio_ecap_init: hiding ecap 0x19@0x270

Jun 09 14:02:11 pve kernel: vfio-pci 0000:08:00.0: vfio_ecap_init: hiding ecap 0x1b@0x2d0

Jun 09 14:02:11 pve kernel: vfio-pci 0000:08:00.0: vfio_ecap_init: hiding ecap 0x26@0x410

Jun 09 14:02:11 pve kernel: vfio-pci 0000:08:00.0: vfio_ecap_init: hiding ecap 0x27@0x440

Jun 09 14:02:12 pve pvedaemon[1510]: <root@pam> end task UPID:pve:00000AEF:0000FCD7:62A1EF4D:qmstart:100:root@pam: OK

Jun 09 14:02:23 pve kernel: usb 3-1: reset low-speed USB device number 2 using xhci_hcd

Jun 09 14:02:24 pve kernel: usb 1-10: reset full-speed USB device number 4 using xhci_hcd

Jun 09 14:02:25 pve pvedaemon[2990]: starting vnc proxy UPID:pve:00000BAE:000104C1:62A1EF61:vncproxy:100:root@pam:

Jun 09 14:02:25 pve pvedaemon[1510]: <root@pam> starting task UPID:pve:00000BAE:000104C1:62A1EF61:vncproxy:100:root@pam:

Jun 09 14:02:26 pve qm[2995]: VM 100 qmp command failed - VM 100 qmp command 'set_password' failed - Could not set password

Jun 09 14:02:26 pve pvedaemon[2990]: Failed to run vncproxy.

Jun 09 14:02:26 pve pvedaemon[1510]: <root@pam> end task UPID:pve:00000BAE:000104C1:62A1EF61:vncproxy:100:root@pam: Failed to run vncproxy.

Jun 09 14:06:28 pve systemd[1]: Starting Cleanup of Temporary Directories...

Jun 09 14:06:28 pve systemd[1]: systemd-tmpfiles-clean.service: Succeeded.

Jun 09 14:06:28 pve systemd[1]: Finished Cleanup of Temporary Directories.

Jun 09 14:13:01 pve pvedaemon[1511]: <root@pam> successful auth for user 'root@pam'

Jun 09 14:17:01 pve CRON[5170]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Jun 09 14:17:01 pve CRON[5171]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)

Jun 09 14:17:01 pve CRON[5170]: pam_unix(cron:session): session closed for user root

Jun 09 14:17:48 pve pveproxy[1521]: worker exit

Jun 09 14:17:48 pve pveproxy[1518]: worker 1521 finished

Jun 09 14:17:48 pve pveproxy[1518]: starting 1 worker(s)

Jun 09 14:17:48 pve pveproxy[1518]: worker 5289 started

Jun 09 14:18:59 pve pvedaemon[5466]: starting vnc proxy UPID:pve:0000155A:000288FB:62A1F343:vncproxy:100:root@pam:

Jun 09 14:18:59 pve pvedaemon[1511]: <root@pam> starting task UPID:pve:0000155A:000288FB:62A1F343:vncproxy:100:root@pam:

Jun 09 14:18:59 pve qm[5468]: VM 100 qmp command failed - VM 100 qmp command 'set_password' failed - Could not set password

Jun 09 14:18:59 pve pvedaemon[5466]: Failed to run vncproxy.

Jun 09 14:18:59 pve pvedaemon[1511]: <root@pam> end task UPID:pve:0000155A:000288FB:62A1F343:vncproxy:100:root@pam: Failed to run vncproxy.
