I'm attempting to halt an OpenMediaVault 5 guest running on Proxmox 7, but the guest VM seems to hang after I issue halt directly on the guest.

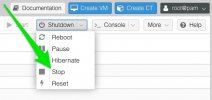

On PVE, qm list shows:

    VMID NAME            STATUS   MEM(MB) BOOTDISK(GB) PID
     111 OpenMediaVault  running  24000   32.00        48090

Clearly it's still being reported as running.

I installed qemu-guest-agent and the behavior didn't change, which was a big surprise to me.

Screenshot taken a minute or two after halt was issued, with the shell entirely absent and unresponsive - cannot even get sshd to respond:

I left the system like this for quite a while and there was no change, except that, oddly, after a long time the "CPU usage" went up from around 0.038 to 0.23...

Network traffic and disk I/O went to and stayed at zero.

I did "stop" on it from PVE and it went on down. It also restarted without any problem.
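For anyone who wants to try the same thing from the PVE shell, here is a rough sketch, not a tested fix. It picks out the guests that `qm list` still reports as running, then asks for a clean shutdown with a hard stop as the fallback once a timeout expires. The function names `running_vmids` and `shutdown_or_stop` and the 120-second default are placeholders I made up; `qm shutdown` with `--timeout` and `--forceStop` is the stock PVE command.

```shell
#!/bin/sh
# Print the VMIDs that `qm list` reports as running.
# Reads `qm list`-style output on stdin, so it can be tried on saved output.
running_vmids() {
    # Skip the header row; column 3 is STATUS, column 1 is VMID.
    awk 'NR > 1 && $3 == "running" { print $1 }'
}

# Ask a guest to shut down cleanly; if it has not stopped when the timeout
# expires, PVE stops it forcibly (--forceStop 1).
# The 120-second default is an arbitrary assumption, not a recommendation.
shutdown_or_stop() {
    vmid="$1"
    timeout="${2:-120}"
    qm shutdown "$vmid" --timeout "$timeout" --forceStop 1
}
```

On a PVE host this could be used as `qm list | running_vmids` to see what is still up, and `shutdown_or_stop 111 60` for the guest above. It only caps how long the wait is; it doesn't explain why the guest hangs after halt.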

What do you all think about this? This is really problematic when you are shutting down PVE and have to wait a very, very, very long time for the system to end up killing the guest(s) that have this problem.