Please, I need some insight, ASAP if possible.

Sorry if this has been answered elsewhere, but my anxiety won't let me think straight right now. I upgraded from 6.4.13 to 7.0.10, after keeping copies of the following:

/etc/kernel/cmdline

/etc/default/grub

/etc/issue

/etc/modules (in case someone has passthrough mods)

/etc/ssh/ssh_config

/etc/ssh/sshd_config

ip address output before and after (it is a mess)

Now I can't log in to Proxmox (I do have CLI access on the machine itself), and of course it has no network access. This is probably related to the following message, which I read from a picture I took during boot:

Failed to start Import ZFS pool HHproxData (see systemctl status zfs-import@HHproxData.service for details)

I tried booting both kernels, 5.11.22-2-pve and 5.4.124-1-pve, with the same result: no GUI access and no network.

Here is what I've checked so far (much of it is probably irrelevant, but I didn't expect any issues, since three other machines upgraded smoothly). This specific machine is meant to replace the main production server. I had every VM, connection, etc. set up, and after the upgrade I can't do anything. Proxmox access goes through vmbr0, which sits on bond0, and bond0 is built on ports enp7s0 and enp0s25. Those ports are configured as 802.3ad on both sides (Proxmox and the switch).
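To make the layout concrete: I no longer have the pre-upgrade /etc/network/interfaces, so the following is not my actual file. It is only a sketch of how a vmbr0-on-bond0 setup like the one described is usually written, following the bond example in the Proxmox network documentation. The address is the one shown on vmbr0 further down; the gateway, bond-miimon and bond-xmit-hash-policy values are assumptions.

```
# Sketch only, not the original file.
auto lo
iface lo inet loopback

iface enp7s0 inet manual

iface enp0s25 inet manual

auto bond0
iface bond0 inet manual
    bond-slaves enp7s0 enp0s25
    bond-miimon 100                      # assumed value
    bond-mode 802.3ad
    bond-xmit-hash-policy layer2+3       # assumed value

auto vmbr0
iface vmbr0 inet static
    address 192.168.1.201/24
    gateway 192.168.1.1                  # assumed, not from my notes
    bridge-ports bond0
    bridge-stp off
    bridge-fd 0
```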

These files are unchanged:

/etc/modules

/etc/issue
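For anyone who wants to repeat the comparison, it could be scripted roughly as below. This is a minimal sketch, assuming the pre-upgrade copies live in a backup directory that mirrors the original paths (for example a hypothetical /root/pre-upgrade/etc/default/grub). The function name `compare_backups` is made up for illustration.

```shell
#!/usr/bin/env bash
# Report which live config files differ from their pre-upgrade copies.
# Usage: compare_backups BACKUP_DIR [LIVE_ROOT]
# Assumption: BACKUP_DIR mirrors the original layout,
# e.g. BACKUP_DIR/etc/default/grub. LIVE_ROOT defaults to "/".
compare_backups() {
    local backup_dir=$1 live_root=${2:-}
    local rel
    for rel in etc/kernel/cmdline etc/default/grub etc/issue etc/modules \
               etc/ssh/ssh_config etc/ssh/sshd_config; do
        if [ ! -e "$backup_dir/$rel" ] || [ ! -e "$live_root/$rel" ]; then
            echo "MISSING  /$rel"      # one of the two copies is absent
        elif cmp -s "$backup_dir/$rel" "$live_root/$rel"; then
            echo "SAME     /$rel"      # byte-for-byte identical
        else
            echo "CHANGED  /$rel"      # inspect with: diff -u backup live
        fi
    done
}
```

Calling `compare_backups /root/pre-upgrade` prints one line per file; for every CHANGED entry, `diff -u` on the two copies shows the exact lines.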

These files changed in some lines, and I can't tell whether the changes matter, so I need to ask:

/etc/kernel/cmdline

root=ZFS=rpool/ROOT/pve-1 boot=zfs

Even though each of my other machines has a different node name, on this one the file contains pve-1 both before and after the upgrade. Does this need to be the name I gave the Proxmox server during installation?

/etc/default/grub

These lines changed here:

GRUB_DISTRIBUTOR="Proxmox Virtual Environment" became GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`. Do I change it back?

The line GRUB_DISABLE_OS_PROBER=true doesn't exist anymore. Do I add it back?

The line GRUB_DISABLE_RECOVERY="true" is now commented out. Do I revert it?

/etc/ssh/ssh_config

It now includes the line Include /etc/ssh/ssh_config.d/*.conf. It is probably there so that other custom files are taken into account as well, but should I comment it out?

/etc/ssh/sshd_config

It also now includes a similar line, Include /etc/ssh/sshd_config.d/*.conf. Same question: should I comment it out?

Finally, the ip address output before and after. I'll post only the "after" lines; my <<<<comments>>>> mark what has changed.

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp7s0: <BROADCAST,MULTICAST <<<<SLAVE is missing>>>>,UP,LOWER_UP> mtu 1500 qdisc mq <<<<master is missing>>>> <<<<bond0 is missing>>>> state UP group default qlen 1000

link/ether f8:b1:56:d1:25:cf <<<<now became f8:b1:56:d1:26:28>>>> brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::fab1:56ff:fed1:2628/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

3: enp0s25: <BROADCAST,MULTICAST,<<<<SLAVE is missing>>>>,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast <<<<master bond0 not exists>>>> state UP group default qlen 1000

link/ether f8:b1:56:d1:25:cf brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::fab1:56ff:fed1:25cf/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

4: enp131s0f0: <BROADCAST,MULTICAST,<<<<SLAVE is missing>>>>,UP,LOWER_UP> mtu 1500 qdisc mq <<<<master bond1 not exists>>>> state UP group default qlen 1000

link/ether 00:1b:21:25:fb:98 brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::21b:21ff:fe25:fb98/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

5: enp131s0f1: <BROADCAST,MULTICAST,<<<<SLAVE is missing>>>>,UP,LOWER_UP> mtu 1500 qdisc mq <<<<master bond1 not exists>>>> state UP group default qlen 1000

link/ether 00:1b:21:25:fb:99 <<<<before it was 00:1b:21:25:fb:98>>>> brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::21b:21ff:fe25:fb99/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

6: enp132s0f0: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq <<<<master bond2 not exists>>>> state UP group default qlen 1000

link/ether 00:1b:21:25:fb:99 <<<<before it was 00:1b:21:25:fb:9c>>>> brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::21b:21ff:fe25:fb9c/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

7: enp132s0f1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq <<<<master bond2 not exists>>>> state UP group default qlen 1000

link/ether 00:1b:21:25:fb:9d <<<<before it was 00:1b:21:25:fb:9c>>>> brd ff:ff:ff:ff:ff:ff

<<<<inet6 fe80::21b:21ff:fe25:fb9d/64 scope link now exists this line>>>>

<<<<valid_lft forever preferred_lft forever now exists this line>>>>

8: bond0: <<<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,MASTER,UP,<<<<LOWER_UP doesnt exist anymore>>>>> mtu 1500 qdisc noqueue master vmbr0 state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether 1e:b7:df:4e:4a:f0 brd ff:ff:ff:ff:ff:ff <<<before it was f8:b1:56:d1:25:cf brd ff:ff:ff:ff:ff:ff>>>>

9: bond1: <<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,MASTER,UP,<<<<LOWER_UP doesnt exist anymore>>>> mtu 1500 qdisc noqueue master vmbr1 state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether 8a:81:b0:36:c4:82 brd ff:ff:ff:ff:ff:ff <<<<before it was 00:1b:21:25:fb:98 brd ff:ff:ff:ff:ff:ff>>>>

10: bond2: <<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,MASTER,UP,LOWER_UP,<<<<LOWER_UP doesnt exist anymore>>>> mtu 1500 qdisc noqueue master vmbr2 state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether d2:0b:48:25:8e:eb brd ff:ff:ff:ff:ff:ff <<<<before it was 00:1b:21:25:fb:9c brd ff:ff:ff:ff:ff:ff>>>>

11: vmbr0: <<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,UP,LOWER_UP,<<<<LOWER_UP doesnt exist anymore>>>>> mtu 1500 qdisc noqueue state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether 16:fc:21:59:fa:81 brd ff:ff:ff:ff:ff:ff <<<<before it was f8:b1:56:d1:25:cf brd ff:ff:ff:ff:ff:ff>>>>

inet 192.168.1.201/24 brd 192.168.1.255 scope global vmbr0

valid_lft forever preferred_lft forever

<<<<inet6 fe80::fab1:56ff:fed1:25cf/64 scope link now exists >>>>

<<<<valid_lft forever preferred_lft forever now exists >>>>

12: vmbr1: <<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,UP,LOWER_UP,<<<<LOWER_UP doesnt exist anymore>>>>> mtu 1500 qdisc noqueue state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether fa:33:04:ca:e5:a9 brd ff:ff:ff:ff:ff:ff <<<<before it was 00:1b:21:25:fb:98 brd ff:ff:ff:ff:ff:ff>>>>

<<<<inet6 fe80::21b:21ff:fe25:fb98/64 scope link not exist anymore>>>>

<<<<valid_lft forever preferred_lft forever not exists anymore>>>>

13: vmbr2: <<<<NO CARRIER exists now>>>>,BROADCAST,MULTICAST,UP,LOWER_UP,<<<<LOWER_UP doesnt exist anymore>>>>> mtu 1500 qdisc noqueue state <<<<UP now it is DOWN>>>> group default qlen 1000

link/ether 22:2f:dd:ec:5a:70 brd ff:ff:ff:ff:ff:ff <<<< before it was 00:1b:21:25:fb:9c brd ff:ff:ff:ff:ff:ff>>>>

<<<<inet6 <<<<fe80::21b:21ff:fe25:fb9c/64 scope link not exists anymore>>>>

<<<<valid_lft forever preferred_lft forever not exists anymore>>>>

<<<<All below lines doesnt exist anymore>>>>

14: tap101i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr101i0 state UNKNOWN group default qlen 1000

link/ether b2:78:b3:22:d8:de brd ff:ff:ff:ff:ff:ff

15: fwbr101i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 06:8b:6e:d2:62:db brd ff:ff:ff:ff:ff:ff

16: fwpr101p0@fwln101i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr2 state UP group default qlen 1000

link/ether e2:b4:85:1d:42:c1 brd ff:ff:ff:ff:ff:ff

17: fwln101i0@fwpr101p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr101i0 state UP group default qlen 1000

link/ether 06:8b:6e:d2:62:db brd ff:ff:ff:ff:ff:ff

As you can see above, the port names stayed the same, but the MAC address changed on some ports and on the bonds and vmbrs as well. All bonds and vmbrs now show NO CARRIER and no longer show LOWER_UP, plus a few other differences that you can find in my <<<<...>>>> comments.
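Since I compared the output by eye, a quicker check for the "master bondX is missing" part could look like the sketch below. It reads the one-line-per-interface format printed by `ip -o link show` and lists physical ports that have no master. The function name is made up, and treating only names starting with "en" or "eth" as physical ports is an assumption that happens to fit this machine.

```shell
#!/usr/bin/env bash
# List physical ports that are not enslaved to any bond or bridge.
# Reads `ip -o link show` style lines on stdin (one interface per line).
# Assumption: physical ports are the ones named en* or eth*.
ports_without_master() {
    awk '
        {
            name = $2
            sub(/:$/, "", name)      # "enp7s0:" -> "enp7s0"
            sub(/@.*/, "", name)     # drop an "@peer" suffix if present
            if (name !~ /^(en|eth)/) next
            has_master = 0
            for (i = 3; i <= NF; i++)
                if ($i == "master") has_master = 1
            if (!has_master) print name
        }
    '
}
```

On the box itself this would be run as `ip -o link show | ports_without_master`. With working bonds it should print nothing; here I would expect it to print all six ports.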

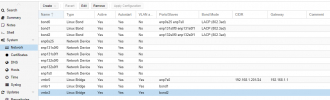

New edit: here is what /etc/network/interfaces looks like now.

I have spent 4+ hours trying, first to regain access and then to find out what is behind that "Failed to start Import ZFS pool HHproxData" message, which is also crucial.

Thank you in advance for any thoughts or guidance.
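For the ZFS message, the boot screen itself points at `systemctl status`. A read-only way to collect that together with the pool state might look like this sketch. The two function names are made up; `systemctl`, `journalctl` and `zpool` are the real tools, and the unit name is built by plain substitution, which assumes the pool name has no characters that systemd would escape (true for HHproxData).

```shell
#!/usr/bin/env bash
# Hypothetical helper names; nothing here imports or modifies a pool.

# Build the per-pool import unit name shown in the boot message.
zfs_import_unit() {
    printf 'zfs-import@%s.service\n' "$1"
}

# Show what the unit logged on this boot and which pools are visible.
zfs_import_report() {
    local pool=$1 unit
    unit=$(zfs_import_unit "$pool")
    systemctl status "$unit" --no-pager
    journalctl -b -u "$unit" --no-pager
    zpool list      # pools that are currently imported
    zpool import    # with no arguments: only lists pools available for import
}
```

Running `zfs_import_report HHproxData` on the console gives output that can be photographed or copied in one go.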

PS - I probably should have kept a copy of /etc/network/interfaces as well, but it is too late for that now.

- During the upgrade, because I assumed it wouldn't cause any problems (based on the three machines I did previously), I chose not to keep my current files but to install the package maintainer's versions. So I answered yes to all 4-5 questions during the upgrade.

- Do I need to change the MAC addresses of those ports, bonds and vmbrs back to what they were? Is there a CLI way to do it (if there is a way at all, the CLI is my only option)?