Hello,

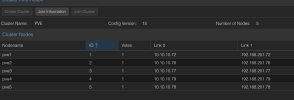

I removed nodes from my Proxmox cluster following this doc. They are no longer members of the cluster, but they are still visible in the HA menu of the GUI (see the screenshot above). Here is the current member list:

cat /etc/pve/.members

{
  "nodename": "galaxie5",
  "version": 60,
  "cluster": { "name": "galaxie", "version": 89, "nodes": 10, "quorate": 1 },
  "nodelist": {
    "galaxie5": { "id": 5, "online": 1, "ip": "147.215.130.105"},
    "galaxie6": { "id": 6, "online": 1, "ip": "147.215.130.106"},
    "galaxie7": { "id": 7, "online": 1, "ip": "147.215.130.107"},
    "galaxie9": { "id": 9, "online": 1, "ip": "147.215.130.109"},
    "galaxie15": { "id": 15, "online": 1, "ip": "147.215.130.115"},
    "galaxie21": { "id": 21, "online": 1, "ip": "147.215.130.121"},
    "galaxie22": { "id": 22, "online": 1, "ip": "147.215.130.122"},
    "galaxie23": { "id": 23, "online": 1, "ip": "147.215.130.123"},
    "galaxie27": { "id": 24, "online": 1, "ip": "147.215.130.127"},
    "galaxie25": { "id": 25, "online": 1, "ip": "147.215.130.125"}
  }
}
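In case it helps to cross-check, here is a rough Python sketch that parses the member list above and reports which names from the HA menu are not in it. The `ha_names` list and the name `removed-node-example` are placeholders I made up for illustration, not real output from my cluster. The helper `stale_names` is likewise just a hypothetical name.

```python
import json

# Copy of the /etc/pve/.members output shown above.
MEMBERS_JSON = """
{
  "nodename": "galaxie5",
  "version": 60,
  "cluster": { "name": "galaxie", "version": 89, "nodes": 10, "quorate": 1 },
  "nodelist": {
    "galaxie5": { "id": 5, "online": 1, "ip": "147.215.130.105"},
    "galaxie6": { "id": 6, "online": 1, "ip": "147.215.130.106"},
    "galaxie7": { "id": 7, "online": 1, "ip": "147.215.130.107"},
    "galaxie9": { "id": 9, "online": 1, "ip": "147.215.130.109"},
    "galaxie15": { "id": 15, "online": 1, "ip": "147.215.130.115"},
    "galaxie21": { "id": 21, "online": 1, "ip": "147.215.130.121"},
    "galaxie22": { "id": 22, "online": 1, "ip": "147.215.130.122"},
    "galaxie23": { "id": 23, "online": 1, "ip": "147.215.130.123"},
    "galaxie27": { "id": 24, "online": 1, "ip": "147.215.130.127"},
    "galaxie25": { "id": 25, "online": 1, "ip": "147.215.130.125"}
  }
}
"""


def stale_names(members, ha_names):
    """Return the names in ha_names that are absent from the cluster nodelist."""
    current = set(members["nodelist"])
    return [name for name in ha_names if name not in current]


members = json.loads(MEMBERS_JSON)

# Placeholder list (assumption): replace with the node names seen in the HA menu.
ha_names = ["galaxie5", "removed-node-example"]

print("Current members:", sorted(members["nodelist"]))
print("In HA menu but not in cluster:", stale_names(members, ha_names))
```

On a node itself, the same check could read `/etc/pve/.members` directly instead of the pasted string.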

Did I forget to do something?

Thank you in advance for your help.