I recently added new drives to a Proxmox cluster running Ceph. When I create the new OSD, the process hangs and stays in the "creating" state for a long time. I waited almost an hour before stopping it.

After that, the OSD appears, but it is down and marked as outdated.

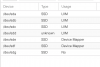

Proxmox version: 6.2-11

Ceph version: 14.2.11

Ceph OSD log:

```
create OSD on /dev/sde (bluestore)
wipe disk/partition: /dev/sde
200+0 records in
200+0 records out
209715200 bytes (210 MB, 200 MiB) copied, 0.603464 s, 348 MB/s
Running command: /bin/ceph-authtool --gen-print-key
Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 3efe28f5-c30d-475f-b104-a7cd9a76fd0e
Running command: /sbin/vgcreate --force --yes ceph-da7ee397-fb11-4920-bd31-ee01aee9f4b6 /dev/sde
stdout: Physical volume "/dev/sde" successfully created.
stdout: Volume group "ceph-da7ee397-fb11-4920-bd31-ee01aee9f4b6" successfully created
Running command: /sbin/lvcreate --yes -l 953861 -n osd-block-3efe28f5-c30d-475f-b104-a7cd9a76fd0e ceph-da7ee397-fb11-4920-bd31-ee01aee9f4b6
stdout: Logical volume "osd-block-3efe28f5-c30d-475f-b104-a7cd9a76fd0e" created.
Running command: /bin/ceph-authtool --gen-print-key
Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-9
--> Executable selinuxenabled not in PATH: /sbin:/bin:/usr/sbin:/usr/bin
Running command: /bin/chown -h ceph:ceph /dev/ceph-da7ee397-fb11-4920-bd31-ee01aee9f4b6/osd-block-3efe28f5-c30d-475f-b104-a7cd9a76fd0e
Running command: /bin/chown -R ceph:ceph /dev/dm-10
Running command: /bin/ln -s /dev/ceph-da7ee397-fb11-4920-bd31-ee01aee9f4b6/osd-block-3efe28f5-c30d-475f-b104-a7cd9a76fd0e /var/lib/ceph/osd/ceph-9/block
Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-9/activate.monmap
stderr: 2020-09-21 15:09:31.699 7f07da8cf700 -1 auth: unable to find a keyring on /etc/pve/priv/ceph.client.bootstrap-osd.keyring: (2) No such file or directory
2020-09-21 15:09:31.699 7f07da8cf700 -1 AuthRegistry(0x7f07d4081d88) no keyring found at /etc/pve/priv/ceph.client.bootstrap-osd.keyring, disabling cephx
stderr: got monmap epoch 3
Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-9/keyring --create-keyring --name osd.9 --add-key AQAKpmhf6J70FBAAjymgFRQxOQfcgZQJqCt60A==
stdout: creating /var/lib/ceph/osd/ceph-9/keyring
added entity osd.9 auth(key=
Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-9/keyring
Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-9/
Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 9 --monmap /var/lib/ceph/osd/ceph-9/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-9/ --osd-uuid 3efe28f5-c30d-475f-b104-a7cd9a76fd0e --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sde
TASK ERROR: command 'ceph-volume lvm create --cluster-fsid 2373833d-e7ca-4ddf-8af4-aeeb8c710949 --data /dev/sde' failed: received interrupt
```
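`ceph-volume lvm create` runs `ceph-volume lvm prepare` followed by `ceph-volume lvm activate`. The log above ends with `--> ceph-volume lvm prepare successful for: /dev/sde` and then the interrupt, so the task was stopped after the prepare stage had already finished. Both values that `ceph-volume lvm activate` takes, the OSD ID and the OSD FSID, appear on the `ceph-osd ... --mkfs` line. The following is a minimal sketch, assuming a POSIX shell with `sed`; the function name `activate_cmd_from_log` is hypothetical. It only prints the activation command for review and does not run anything against the cluster.

```shell
# Hypothetical helper: read a "ceph-volume lvm create" task log on stdin and
# print the matching "ceph-volume lvm activate" command. It only prints the
# command; nothing is executed against the cluster.
activate_cmd_from_log() {
    # The "ceph-osd ... --mkfs -i <id> ... --osd-uuid <fsid>" line carries
    # both values that "ceph-volume lvm activate" needs.
    sed -n 's/.*--mkfs -i \([0-9][0-9]*\) .*--osd-uuid \([0-9a-f-][0-9a-f-]*\).*/\1 \2/p' |
        head -n 1 |
        while read -r osd_id osd_fsid; do
            # Only the first match is used, so a log pasted twice still
            # yields a single command.
            printf 'ceph-volume lvm activate --bluestore %s %s\n' "$osd_id" "$osd_fsid"
        done
}
```

For example, `activate_cmd_from_log < osd-create.log` (where `osd-create.log` is a hypothetical file holding the task log) would print `ceph-volume lvm activate --bluestore 9 3efe28f5-c30d-475f-b104-a7cd9a76fd0e` for the log in this post.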
