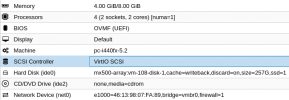

An idea has been percolating in my mind for some time now... My PVE hyperconverged Ceph cluster does not perform as well as I would like, and I am considering non-hyperconverged cluster options for future growth (capacity). We have two "hadoop" high-density SuperMicro HDD nodes; purchasing a third would be trivial. I am considering something like this for each node:

- 12x2TB HDDs

- 1x PCIe-to-NVMe card for caching

- 2x 10GbE

- 64GB-128GB of DDR3 RAM

- Dual E5-2600 series V0 Intel Xeons

- 4x 500GB SATA SSDs

- 1x LSI 9800-i8

- 2x 10Gbps SFP+

- HPE Gen 8 Rackmount Servers

- Dual E5-2470 V2 OR E5-2690 V2 Intel Xeons

- Sufficient memory per node: 48GB-160GB of 1600MHz RAM
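For a rough sense of how much capacity such a tier would add, here is a back-of-the-envelope sketch. It assumes (none of this is settled above) three SuperMicro nodes with 12x 2TB HDDs each, a replicated pool with size=3, and keeping usage below Ceph's default nearfull ratio of 0.85. It ignores the SSD/NVMe devices and any on-disk overhead.

```python
# Back-of-the-envelope capacity estimate for a hypothetical 3-node HDD tier.
# Assumptions: 3 nodes x 12 x 2 TB HDDs, replicated pool size=3,
# and staying under Ceph's default nearfull ratio (0.85).

NODES = 3
HDDS_PER_NODE = 12
HDD_TB = 2.0           # decimal (vendor) terabytes per HDD
REPLICA_SIZE = 3       # pool "size": copies kept of every object
NEARFULL_RATIO = 0.85  # default mon_osd_nearfull_ratio


def usable_tb(nodes, hdds, hdd_tb, size, nearfull):
    """Return (raw, after replication, below nearfull) capacity in TB."""
    raw = nodes * hdds * hdd_tb
    replicated = raw / size
    return raw, replicated, replicated * nearfull


raw, replicated, practical = usable_tb(
    NODES, HDDS_PER_NODE, HDD_TB, REPLICA_SIZE, NEARFULL_RATIO
)
print(f"raw:                  {raw:.1f} TB")
print(f"after 3x replication: {replicated:.1f} TB")
print(f"below nearfull ratio: {practical:.1f} TB")
```

Under those assumptions, 72TB raw works out to roughly 20TB that could comfortably hold data.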

Some additional questions might be:

- My assumption is that Ceph automatically stores data close to where it is being read and written. I already know that the existing pool is performing below my expectations. Would I see worse, similar, or better performance by adding three non-PVE nodes to the same pool?

- Would it make more sense to install PVE on those three SuperMicro servers?

- Is this all a fool's errand?
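On the first question: as far as I understand it, Ceph does not place data near the client that writes it. CRUSH computes each placement group's OSDs deterministically from the cluster map and the failure-domain rules, and by default reads are served by the primary OSD. The sketch below uses rendezvous hashing as a simplified stand-in for CRUSH (it is not the real algorithm, it skips placement groups and weights, and the host names are hypothetical) to illustrate that the client's location is not an input to placement.

```python
import hashlib

# Hypothetical hosts: three existing PVE nodes plus three SuperMicro nodes.
HOSTS = ["pve1", "pve2", "pve3", "sm1", "sm2", "sm3"]


def score(obj: str, host: str) -> int:
    """Deterministic pseudo-random score for an (object, host) pair."""
    digest = hashlib.sha256(f"{obj}:{host}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(obj: str, hosts: list, replicas: int = 3) -> list:
    """Pick `replicas` distinct hosts for an object (rendezvous hashing).

    Note that nothing about the writing client is passed in: placement
    depends only on the object name and the list of hosts.
    """
    ranked = sorted(hosts, key=lambda h: score(obj, h), reverse=True)
    return ranked[:replicas]  # first entry plays the role of the primary


for name in ["rbd_data.1", "rbd_data.2", "rbd_data.3"]:
    print(name, "->", place(name, HOSTS))
```

Under this simplified model, putting all six hosts in one pool spreads every VM's data across both the PVE and SuperMicro nodes, so many reads and writes would cross the network to the HDD nodes regardless of where the VM runs.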
