Hi,

I did what I have done many times before: added a node to an existing cluster. After adding it, the whole cluster went down (the VMs kept running, but PVE stopped responding).

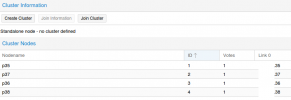

Here is how it looked on one node:

Code:

[Fri Jan 29 14:46:36 2021] INFO: task pvesr:42198 blocked for more than 120 seconds.

[Fri Jan 29 14:46:36 2021] Tainted: P O 5.4.65-1-pve #1

[Fri Jan 29 14:46:36 2021] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Fri Jan 29 14:46:36 2021] pvesr D 0 42198 42181 0x00000000

[Fri Jan 29 14:46:36 2021] Call Trace:

[Fri Jan 29 14:46:36 2021] __schedule+0x2e6/0x6f0

[Fri Jan 29 14:46:36 2021] ? filename_parentat.isra.57.part.58+0xf7/0x180

[Fri Jan 29 14:46:36 2021] schedule+0x33/0xa0

[Fri Jan 29 14:46:36 2021] rwsem_down_write_slowpath+0x2ed/0x4a0

[Fri Jan 29 14:46:36 2021] down_write+0x3d/0x40

[Fri Jan 29 14:46:36 2021] filename_create+0x8e/0x180

[Fri Jan 29 14:46:36 2021] do_mkdirat+0x59/0x110

[Fri Jan 29 14:46:36 2021] __x64_sys_mkdir+0x1b/0x20

[Fri Jan 29 14:46:36 2021] do_syscall_64+0x57/0x190

[Fri Jan 29 14:46:36 2021] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[Fri Jan 29 14:46:36 2021] RIP: 0033:0x7f6f1cae10d7

[Fri Jan 29 14:46:36 2021] Code: Bad RIP value.

[Fri Jan 29 14:46:36 2021] RSP: 002b:00007ffd3fde42e8 EFLAGS: 00000246 ORIG_RAX: 0000000000000053

[Fri Jan 29 14:46:36 2021] RAX: ffffffffffffffda RBX: 000055cf08ca3260 RCX: 00007f6f1cae10d7

[Fri Jan 29 14:46:36 2021] RDX: 000055cf07d073d4 RSI: 00000000000001ff RDI: 000055cf0ce5bde0

[Fri Jan 29 14:46:36 2021] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000004

[Fri Jan 29 14:46:36 2021] R10: 0000000000000000 R11: 0000000000000246 R12: 000055cf0a10b7f8

[Fri Jan 29 14:46:36 2021] R13: 000055cf0ce5bde0 R14: 000055cf0ca0bc80 R15: 00000000000001ff

[Fri Jan 29 14:46:36 2021] INFO: task pvesr:42931 blocked for more than 120 seconds.

[Fri Jan 29 14:46:36 2021] Tainted: P O 5.4.65-1-pve #1

[Fri Jan 29 14:46:36 2021] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Fri Jan 29 14:46:36 2021] pvesr D 0 42931 1 0x00000000

[Fri Jan 29 14:46:36 2021] Call Trace:

[Fri Jan 29 14:46:36 2021] __schedule+0x2e6/0x6f0

[Fri Jan 29 14:46:36 2021] ? filename_parentat.isra.57.part.58+0xf7/0x180

[Fri Jan 29 14:46:36 2021] schedule+0x33/0xa0

[Fri Jan 29 14:46:36 2021] rwsem_down_write_slowpath+0x2ed/0x4a0

[Fri Jan 29 14:46:36 2021] down_write+0x3d/0x40

[Fri Jan 29 14:46:36 2021] filename_create+0x8e/0x180

[Fri Jan 29 14:46:36 2021] do_mkdirat+0x59/0x110

[Fri Jan 29 14:46:36 2021] __x64_sys_mkdir+0x1b/0x20

[Fri Jan 29 14:46:36 2021] do_syscall_64+0x57/0x190

[Fri Jan 29 14:46:36 2021] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[Fri Jan 29 14:46:36 2021] RIP: 0033:0x7f0942b020d7

[Fri Jan 29 14:46:36 2021] Code: Bad RIP value.

[Fri Jan 29 14:46:36 2021] RSP: 002b:00007ffeea576748 EFLAGS: 00000246 ORIG_RAX: 0000000000000053

[Fri Jan 29 14:46:36 2021] RAX: ffffffffffffffda RBX: 0000557237cd3260 RCX: 00007f0942b020d7

[Fri Jan 29 14:46:36 2021] RDX: 0000557236ca83d4 RSI: 00000000000001ff RDI: 000055723bdb3520

[Fri Jan 29 14:46:36 2021] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000004

[Fri Jan 29 14:46:36 2021] R10: 0000000000000000 R11: 0000000000000246 R12: 000055723913acc8

[Fri Jan 29 14:46:36 2021] R13: 000055723bdb3520 R14: 000055723ba38958 R15: 00000000000001ff

The HTTP GUI was not working on the old cluster nodes.
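The hung-task warnings in the kernel log above can be condensed to one line per blocked task, which makes it easier to compare nodes. This is only a minimal sketch: `hung_tasks` is a hypothetical helper name, and it assumes the log is piped in as text (for example from `dmesg -T`) in the same format as shown above.

```shell
# Hypothetical helper: read kernel log text on stdin and print one summary
# line per "blocked for more than N seconds" warning, in log order.
hung_tasks() {
  sed -n 's/.*INFO: task \(.*\):\([0-9][0-9]*\) blocked for more than \([0-9][0-9]*\) seconds.*/\1 pid=\2 timeout=\3s/p'
}

# Usage on a node (read-only):
#   dmesg -T | hung_tasks
```

It only reads the log, so it should be safe to run on a production node.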

I SSHed back into the updated, newly added node.

I ran pvecm status and saw the expected old cluster name, but with only 1 node.
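For comparing nodes, that membership check can be reduced to the two values that matter here. This is a rough sketch, assuming the pvecm status output contains `Nodes:` and `Quorate:` lines (as it does on the PVE versions I know of); `cluster_summary` is a hypothetical helper name, not part of PVE.

```shell
# Hypothetical helper: read `pvecm status` output on stdin and print the
# node count and quorum state on a single line.
cluster_summary() {
  awk -F': *' '
    /^Nodes:/   { nodes = $2 }    # number of nodes this member currently sees
    /^Quorate:/ { quorate = $2 }  # Yes/No as reported by votequorum
    END { printf "nodes=%s quorate=%s\n", nodes, quorate }
  '
}

# Usage on each cluster node (read-only):
#   pvecm status | cluster_summary
```

Running it on the new node and on an old node side by side would show whether they ended up with different views of the same cluster.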

I decided to shut down the newly added node, and the old cluster nodes started responding again.

The VMs were not affected, as they would have been if I had had HA enabled.

Anyway, I wonder how I should go about debugging this without causing problems for my production cluster?