So I've been having an "ongoing" issue with PBS. I'm not virtualizing PBS within PVE; it is a separate machine.

Backups (especially of large VMs') usually fail with the error ERROR: backup write data failed: command error: protocol canceled

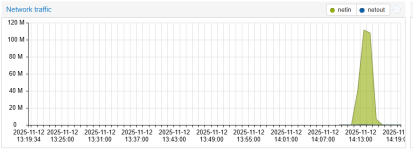

Smaller vm's I can retry and it will eventually work but the larger ones never succeed. I though it might be network related but even during the backup I can run iperf3 and get near gig speeds which is the link speed.

As you can see this vm is 340 GB and doesn't make it very far. most google results of this error point to something network related but I havent made any network changes from default on PVE or PBS and I have no other network issues. I've kind of run out of ideas.

The logs on PBS dont say much.

EDIT:

I should add the backup disk seems fine.

Backups (especially of large VMs) usually fail with the error `ERROR: backup write data failed: command error: protocol canceled`

With smaller VMs I can retry and it will eventually work, but the larger ones never succeed. I thought it might be network related, but even during the backup I can run iperf3 and get near-gigabit speeds, which is the link speed.

Code:

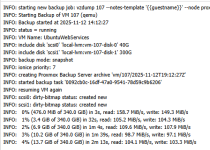

INFO: starting new backup job: vzdump 107 --notes-template '{{guestname}}' --all 0 --node proxmox --mode snapshot --mailnotification always --storage PBS

INFO: Starting Backup of VM 107 (qemu)

INFO: Backup started at 2025-11-11 15:39:18

INFO: status = stopped

INFO: backup mode: stop

INFO: ionice priority: 7

INFO: VM Name: UbuntuWebServices

INFO: include disk 'scsi0' 'local-lvm:vm-107-disk-0' 40G

INFO: include disk 'scsi1' 'local-lvm:vm-107-disk-1' 300G

INFO: creating Proxmox Backup Server archive 'vm/107/2025-11-11T20:39:18Z'

INFO: starting kvm to execute backup task

INFO: started backup task '97ea9034-67bc-41b6-85e3-ba3c81cdc1fc'

INFO: scsi0: dirty-bitmap status: created new

INFO: scsi1: dirty-bitmap status: created new

INFO: 0% (344.0 MiB of 340.0 GiB) in 3s, read: 114.7 MiB/s, write: 105.3 MiB/s

INFO: 1% (3.5 GiB of 340.0 GiB) in 34s, read: 104.5 MiB/s, write: 103.6 MiB/s

INFO: 1% (6.3 GiB of 340.0 GiB) in 16m 39s, read: 3.0 MiB/s, write: 3.0 MiB/s

ERROR: backup write data failed: command error: protocol canceled

INFO: aborting backup job

INFO: stopping kvm after backup task

ERROR: Backup of VM 107 failed - backup write data failed: command error: protocol canceled

INFO: Failed at 2025-11-11 15:55:59

INFO: Backup job finished with errors

INFO: notified via target `mail-to-root`

TASK ERROR: job errors

As you can see, this VM is 340 GB and the backup doesn't get very far. Most Google results for this error point to something network related, but I haven't made any network changes from the defaults on PVE or PBS, and I have no other network issues. I've kind of run out of ideas.
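The slowdown in the task log above can be quantified from the progress lines themselves. The following is a minimal Python sketch, not part of the original troubleshooting: the helper names `parse_elapsed` and `interval_rates` are made up for illustration, and the elapsed-time format (`h`/`m`/`s` suffixes) is assumed from the three progress lines shown. It computes the average throughput between consecutive progress lines rather than relying on the per-line `read:`/`write:` figures.

```python
import re

# Matches vzdump progress lines such as:
# INFO: 1% (3.5 GiB of 340.0 GiB) in 34s, read: 104.5 MiB/s, write: 103.6 MiB/s
PROGRESS = re.compile(
    r"INFO:\s+\d+% \((?P<done>[\d.]+) (?P<unit>MiB|GiB|TiB) of .*?\)"
    r" in (?P<elapsed>[\dhms ]+),"
)
UNIT_TO_MIB = {"MiB": 1.0, "GiB": 1024.0, "TiB": 1024.0 * 1024.0}


def parse_elapsed(text):
    """Convert an elapsed string like '16m 39s' to seconds (format assumed)."""
    total = 0
    for value, unit in re.findall(r"(\d+)([hms])", text):
        total += int(value) * {"h": 3600, "m": 60, "s": 1}[unit]
    return total


def interval_rates(log_text):
    """Return (start_s, end_s, MiB/s) for each pair of consecutive progress lines."""
    points = []
    for m in PROGRESS.finditer(log_text):
        mib = float(m.group("done")) * UNIT_TO_MIB[m.group("unit")]
        points.append((parse_elapsed(m.group("elapsed")), mib))
    rates = []
    for (t0, d0), (t1, d1) in zip(points, points[1:]):
        if t1 > t0:  # skip lines that share a timestamp
            rates.append((t0, t1, (d1 - d0) / (t1 - t0)))
    return rates


# The three progress lines from the failed job above.
SAMPLE = """\
INFO: 0% (344.0 MiB of 340.0 GiB) in 3s, read: 114.7 MiB/s, write: 105.3 MiB/s
INFO: 1% (3.5 GiB of 340.0 GiB) in 34s, read: 104.5 MiB/s, write: 103.6 MiB/s
INFO: 1% (6.3 GiB of 340.0 GiB) in 16m 39s, read: 3.0 MiB/s, write: 3.0 MiB/s
"""

if __name__ == "__main__":
    for start, end, rate in interval_rates(SAMPLE):
        print(f"{start:>5}s to {end:>5}s: {rate:6.1f} MiB/s")
```

For this job, the first interval (3s to 34s) averages roughly 104.5 MiB/s, close to what a gigabit link can carry, while the second (34s to 16m 39s) averages about 3.0 MiB/s. In other words, the transfer ran at full speed for about half a minute and then nearly stalled for the remaining 16 minutes before the connection was reset.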

The logs on PBS don't say much.

Code:

Nov 11 15:56:43 pbs proxmox-backup-proxy[760]: backup failed: connection error: connection reset

Nov 11 15:56:43 pbs proxmox-backup-proxy[760]: removing failed backup

EDIT:

I should add the backup disk seems fine.

Code:

root@pbs:~# hdparm -Tt /dev/sdb

/dev/sdb:

Timing cached reads: 11842 MB in 1.99 seconds = 5941.73 MB/sec

Timing buffered disk reads: 542 MB in 3.00 seconds = 180.61 MB/sec
