How would I go about doing this? I'm new to this btw.

My use case is this: have multiple VMs use bond0 for fault tolerance and load balancing (if one VM saturates one GbE link, the other VMs will automagically use the other bond0 slaves), and use the onboard Ethernet port exclusively for management.

Question 1: Would this setup be ideal, or is there a better way, like a dedicated GbE port per VM?

Question 2: Do I need to add bond0 to vmbr0's Ports/Slaves, or create vmbr1 with bond0 as its slave? I'm a bit confused as to how bond0 would get an IP.
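
To make the second option concrete, this is roughly what I think it would look like in `/etc/network/interfaces`. It is an untested sketch, not something I have applied. The port names `ens2f0` to `ens2f3` are my guess at how `ens2f*` expands, the address and gateway are placeholders, and `active-backup` is just a conservative bond mode (as far as I know, `802.3ad` would need LACP support on the switch). In this layout neither bond0 nor vmbr1 carries a host IP; the VMs attached to vmbr1 would get their addresses inside the guest.

```
auto lo
iface lo inet loopback

# Onboard port, used only for management via vmbr0
iface enp6s0 inet manual

auto vmbr0
iface vmbr0 inet static
    # placeholder address and gateway, replace with real values
    address 192.168.1.10/24
    gateway 192.168.1.1
    bridge-ports enp6s0
    bridge-stp off
    bridge-fd 0

# 4-port Intel card (assumed names), enslaved to bond0
iface ens2f0 inet manual
iface ens2f1 inet manual
iface ens2f2 inet manual
iface ens2f3 inet manual

auto bond0
iface bond0 inet manual
    bond-slaves ens2f0 ens2f1 ens2f2 ens2f3
    bond-miimon 100
    # assumption: conservative default; 802.3ad needs LACP on the switch
    bond-mode active-backup

# VM-only bridge on top of the bond, no host IP
auto vmbr1
iface vmbr1 inet manual
    bridge-ports bond0
    bridge-stp off
    bridge-fd 0
```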

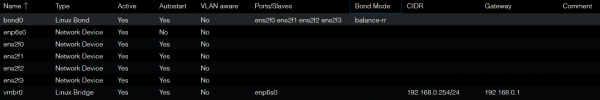

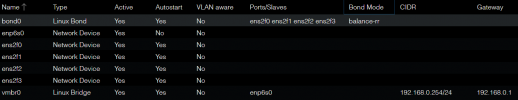

This is my current setup:

ens2f* are the ports of the 4-port Intel GbE card; enp6s0 is the onboard (management) port. All LAN ports go to one switch, which goes to the router.

Thanks in advance!

My usecase is this: Have multiple VMs use bond0 for fault tolerance and load balancing (if 1 VM saturates 1 GbE line, the other VMs will automagically use the other bond0 slaves. And use the onboard eth exclusively for management.

Question 1: Would this setup be ideal? Or is there a better way? Like dedicated GbE port per VM?

Question 2: Do I need to add bond0 to vmbr0's Ports/Slaves? or Create vmbr1 with bond0 as the slave? A bit confused as to how bond0 would get the IP.

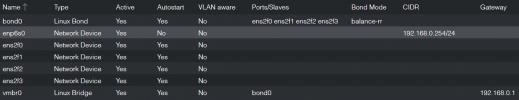

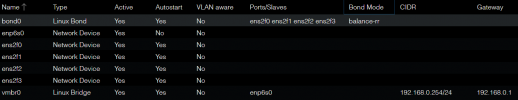

This is my current setup:

ens2f* are the 4 port intel GbE card, enp6s0 is the onboard (Management) port. All LAN ports go to 1 Switch that goes to the router.

Thanks in advance!