Hello!

I have worked with Proxmox virtualization before, but never needed to build a cluster. Now the time for clustering has come.

In particular, I wanted a convenient and flexible network virtualization solution. The standard configuration based on native Linux networking seems cumbersome to me and does not scale well.

The goal was to be able to add any number of VLANs easily and to assign VLAN tags to virtual machines, with the option of changing them later. In my opinion, the best solution is to replace the standard Linux network configuration with an Open vSwitch (OVS) switch.

So, after studying the Proxmox wiki and other sources on the Internet, I arrived at the following network configuration:


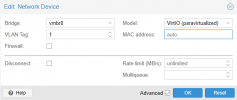


    auto lo
    iface lo inet loopback

    allow-vmbr0 ens17f0
    auto ens17f0
    iface ens17f0 inet manual
        mtu 9214

    allow-vmbr0 ens17f1
    auto ens17f1
    iface ens17f1 inet manual
        mtu 9214

    auto mgmt1
    iface mgmt1 inet static
        address 192.168.10.205/20
        gateway 192.168.10.1
        ovs_type OVSIntPort
        ovs_bridge vmbr0
        ovs_mtu 1500
        ovs_options tag=1
        ovs_extra set interface ${IFACE} external-ids:iface-id=$(hostname -s)-${IFACE}-vif

    auto bond0
    iface bond0 inet manual
        ovs_bonds ens17f0 ens17f1
        ovs_type OVSBond
        ovs_bridge vmbr0
        ovs_mtu 9214
        ovs_options lacp=active trunks=1,10,50 vlan_mode=native-untagged bond_mode=balance-tcp tag=1 other_config:lacp-time=fast

    auto vmbr0
    iface vmbr0 inet manual
        ovs_type OVSBridge
        ovs_ports bond0 mgmt1
        ovs_mtu 9214

This setup also uses a link aggregated via LACP.

To trunk all VLANs, do not list them in "trunks=..."; simply leave the trunks key out. OVS expects numeric VLAN IDs in trunks, and a port without a trunks list carries every VLAN.
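As a rough sketch of the two variants, the helper below prints the `ovs_options` line for the bond. The function name `ovs_bond_options` is made up for this example; the remaining options are copied from the configuration in this post. With VLAN IDs as arguments it emits an explicit `trunks=` list, with no arguments it leaves the key out.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the ovs_options line for bond0.
# Arguments: zero or more VLAN IDs that the bond should trunk.
ovs_bond_options() {
    local opts="lacp=active"
    if [ "$#" -gt 0 ]; then
        # Join all arguments with commas, e.g. "1 10 50" -> "1,10,50".
        local IFS=,
        opts="$opts trunks=$*"
    fi
    echo "ovs_options $opts vlan_mode=native-untagged bond_mode=balance-tcp tag=1 other_config:lacp-time=fast"
}

# Examples:
#   ovs_bond_options 1 10 50   # explicit VLAN list
#   ovs_bond_options           # no trunks key, port carries all VLANs
```

The printed line goes into the `iface bond0` stanza of /etc/network/interfaces.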

As a result, there is only one network bridge, to which all VMs are connected, and the Proxmox management interface can be placed in any convenient subnet.

Creating a VM network interface then looks like this:

To put a VM into a subnet, you only need to specify its VLAN tag. To make a new subnet available on the Proxmox node, add its VLAN tag to the network configuration.
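The same can be done from the command line with `qm set`. Below is a small sketch that builds the value for the `net0` option. The helper name `vm_net_spec`, the virtio NIC model and the VM ID 100 are assumptions for illustration only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the value for a VM's netN option.
# $1 = VLAN tag (1-4094), $2 = bridge (defaults to vmbr0).
# The NIC model is assumed to be virtio.
vm_net_spec() {
    local tag="$1" bridge="${2:-vmbr0}"
    case "$tag" in
        ''|*[!0-9]*) echo "VLAN tag must be a number" >&2; return 1 ;;
    esac
    if [ "$tag" -lt 1 ] || [ "$tag" -gt 4094 ]; then
        echo "VLAN tag must be between 1 and 4094" >&2
        return 1
    fi
    echo "virtio,bridge=${bridge},tag=${tag}"
}

# Example (VM ID 100 is a placeholder):
#   qm set 100 --net0 "$(vm_net_spec 10)"
```

Moving a VM to another subnet is then just a matter of running the command again with a different tag.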

I am also including the sequence of steps I use to configure the system after installation.

Be sure to check the network configuration after a reboot and before joining the node to the cluster.
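For that check, something along these lines might help. It is only a sketch: the function name `check_ovs_stanzas` is made up, and it merely verifies that the interfaces file still declares the three OVS object types used in this setup. The runtime commands in the trailing comments have to be run by hand on the node.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: make sure the interfaces file declares the
# bridge, the bond and the internal port.
# $1 = path to the file (defaults to /etc/network/interfaces).
check_ovs_stanzas() {
    local file="${1:-/etc/network/interfaces}" type missing=0
    for type in OVSBridge OVSBond OVSIntPort; do
        if ! grep -Eq "^[[:space:]]*ovs_type[[:space:]]+${type}[[:space:]]*$" "$file"; then
            echo "missing ovs_type ${type} in ${file}" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Runtime checks to run manually after the reboot (require Open vSwitch):
#   ovs-vsctl show               # bridge, bond and ports as OVS sees them
#   ovs-appctl bond/show bond0   # state of the bond members
#   ovs-appctl lacp/show bond0   # LACP negotiation status
#   ip -br addr show mgmt1       # management address is up
```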

    ### This script is intended for a Proxmox VE 6.3 installation on Debian 10 (buster)

    ### Remove the enterprise repository
    rm /etc/apt/sources.list.d/pve-enterprise.list

    ### Add the installation repository (needed only when installing PVE)
    echo "deb http://download.proxmox.com/debian/pve buster pve-no-subscription" > /etc/apt/sources.list.d/pve-install-repo.list

    echo '
    # Default Debian repositories
    deb http://deb.debian.org/debian/ buster main
    deb-src http://deb.debian.org/debian/ buster main
    deb http://deb.debian.org/debian/ buster-updates main
    deb-src http://deb.debian.org/debian/ buster-updates main

    # Debian security updates
    deb http://security.debian.org/debian-security buster/updates main contrib
    deb-src http://security.debian.org/debian-security buster/updates main contrib

    # PVE no-subscription repository provided by proxmox.com
    deb http://download.proxmox.com/debian/pve buster pve-no-subscription' > /etc/apt/sources.list
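The release name in the repository lines has to match the installed Debian version (buster for Proxmox VE 6.x). One way to avoid typing it by hand is to read it from the running system. This is just a sketch: the function names are made up, and it assumes /etc/os-release defines VERSION_CODENAME, which is the case on Debian 10.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the PVE no-subscription repository line
# for a given Debian codename.
pve_repo_line() {
    echo "deb http://download.proxmox.com/debian/pve $1 pve-no-subscription"
}

# Read the codename of the running system.
# Assumes /etc/os-release defines VERSION_CODENAME.
current_codename() {
    ( . /etc/os-release && echo "$VERSION_CODENAME" )
}

# Example (as root):
#   pve_repo_line "$(current_codename)" > /etc/apt/sources.list.d/pve-install-repo.list
```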

    ### Add the additional repository for Ceph (needed only when installing Ceph)
    echo "deb http://download.proxmox.com/debian/ceph-nautilus buster main" > /etc/apt/sources.list.d/ceph.list

    apt update
    apt dist-upgrade

    apt install net-tools
    apt install ethtool
    apt install openvswitch-switch
    apt install ifenslave-2.6
    apt install ifupdown2

    nano /etc/sysctl.conf
    ### The following line must be present and uncommented:
    net.ipv4.ip_forward = 1

    ### Apply and check
    sysctl -p
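If you prefer not to edit the file by hand, the setting can be applied with a few lines of shell. This is a minimal sketch; the helper name `ensure_sysctl` is made up. It removes any existing assignment of the key, including a commented-out one, and appends the wanted value, so it can be run more than once.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure "key = value" appears exactly once in a
# sysctl-style file. $1 = file, $2 = key, $3 = value.
ensure_sysctl() {
    local file="$1" key="$2" value="$3" tmp
    # Escape the dots so the key is matched literally by grep.
    local pattern="${key//./\\.}"
    tmp="$(mktemp)"
    # Copy everything except existing (possibly commented-out) assignments.
    grep -Ev "^[[:space:]]*#?[[:space:]]*${pattern}[[:space:]]*=" "$file" > "$tmp" || true
    echo "${key} = ${value}" >> "$tmp"
    # Write back in place so the owner and mode of the file are kept.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example (as root):
#   ensure_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1
#   sysctl -p
```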

    ### List all cluster nodes in /etc/hosts
    nano /etc/hosts

    ### This server: address hostname.domain hostname
    127.0.0.1 localhost.localdomain localhost
    192.168.10.201 hv01.your.company.domain hv01

    ### Other nodes in the cluster
    192.168.10.202 hv02.your.company.domain hv02
    192.168.10.203 hv03.your.company.domain hv03
    192.168.10.204 hv04.your.company.domain hv04
    192.168.10.205 hv05.your.company.domain hv05
    192.168.10.206 hv06.your.company.domain hv06
    192.168.10.207 hv07.your.company.domain hv07
    192.168.10.208 hv08.your.company.domain hv08
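Since the entries follow a regular pattern, they can also be generated instead of typed. The sketch below assumes the naming and addressing scheme from the list above (hv01 at .201 and so on); the function name `gen_cluster_hosts` and its parameters are made up for the example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines for nodes hv01..hvNN.
# $1 = number of nodes, $2 = DNS domain,
# $3 = network prefix (default 192.168.10), $4 = first host octet (default 201).
gen_cluster_hosts() {
    local count="$1" domain="$2" prefix="${3:-192.168.10}" first="${4:-201}" i
    for ((i = 1; i <= count; i++)); do
        printf '%s.%d hv%02d.%s hv%02d\n' \
            "$prefix" "$((first + i - 1))" "$i" "$domain" "$i"
    done
}

# Example: review the output first, then append it as root.
#   gen_cluster_hosts 8 your.company.domain
#   gen_cluster_hosts 8 your.company.domain >> /etc/hosts
```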

    ### Network configuration using an OVS switch: SFP+ bond, lacp=active, native-untagged trunk + listed trunk VLANs
    ### Adjust to your own network settings
    ##############################################################################################################################
    # network interface settings; autogenerated
    # Please do NOT modify this file directly, unless you know what
    # you're doing.
    #
    # If you want to manage parts of the network configuration manually,
    # please utilize the 'source' or 'source-directory' directives to do
    # so.
    # PVE will preserve these directives, but will NOT read its network
    # configuration from sourced files, so do not attempt to move any of
    # the PVE managed interfaces into external files!

    auto lo
    iface lo inet loopback

    iface ens11f0 inet manual

    iface ens11f1 inet manual

    allow-vmbr0 ens17f0
    auto ens17f0
    iface ens17f0 inet manual
        mtu 9214

    allow-vmbr0 ens17f1
    auto ens17f1
    iface ens17f1 inet manual
        mtu 9214

    auto mgmt1
    iface mgmt1 inet static
        address 192.168.10.205/20
        gateway 192.168.10.1
        ovs_type OVSIntPort
        ovs_bridge vmbr0
        ovs_mtu 1500
        ovs_options tag=1
        ovs_extra set interface ${IFACE} external-ids:iface-id=$(hostname -s)-${IFACE}-vif

    auto bond0
    iface bond0 inet manual
        ovs_bonds ens17f0 ens17f1
        ovs_type OVSBond
        ovs_bridge vmbr0
        ovs_mtu 9214
        ovs_options lacp=active trunks=1,10,50 vlan_mode=native-untagged bond_mode=balance-tcp tag=1 other_config:lacp-time=fast

    auto vmbr0
    iface vmbr0 inet manual
        ovs_type OVSBridge
        ovs_ports bond0 mgmt1
        ovs_mtu 9214
    ####################################################################################################################################

    ### Cluster quorum tuning

    ### Packages for the witness server
    apt install corosync-qdevice
    apt install corosync-qnetd

    ### Packages for the other nodes
    apt install corosync-qdevice

    ### Guest agent for Linux VMs
    apt install qemu-guest-agent
