That's a good point. NVMe multipath seems to be using the NUMA I/O policy by default, so the first run below is with NUMA.
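For reference, here is a minimal Python sketch for checking (and switching) the policy. It assumes the namespaces go through the kernel's native NVMe multipath, which exposes a per-subsystem `iopolicy` attribute under `/sys/class/nvme-subsystem/`. The helper names and the `nvme-subsys3` example are hypothetical. Writing the attribute needs root and is not persistent across reboots, and `queue-depth` is only accepted by newer kernels.

```python
from pathlib import Path

# Assumption: native NVMe multipath, one "iopolicy" attribute per subsystem.
SUBSYS_ROOT = Path("/sys/class/nvme-subsystem")
VALID_POLICIES = {"numa", "round-robin", "queue-depth"}  # queue-depth: newer kernels only


def read_iopolicies(root: Path = SUBSYS_ROOT) -> dict[str, str]:
    """Return {subsystem name: current I/O policy} for every NVMe subsystem found."""
    policies = {}
    for attr in sorted(root.glob("nvme-subsys*/iopolicy")):
        policies[attr.parent.name] = attr.read_text().strip()
    return policies


def set_iopolicy(subsys: str, policy: str, root: Path = SUBSYS_ROOT) -> None:
    """Switch one subsystem's policy (hypothetical helper; needs root, not persistent)."""
    if policy not in VALID_POLICIES:
        raise ValueError(f"unknown iopolicy: {policy!r}")
    (root / subsys / "iopolicy").write_text(policy + "\n")


if __name__ == "__main__":
    # Prints nothing if the host has no NVMe subsystems.
    for name, policy in read_iopolicies().items():
        print(f"{name}: {policy}")
```

Switching a single (hypothetical) subsystem would then be `set_iopolicy("nvme-subsys3", "round-robin")`, run as root.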


Code:

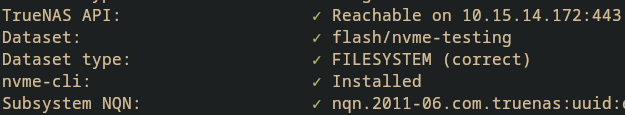

FIO Storage Benchmark

Running benchmark on storage: tn-nvme

FIO installation: ✓ fio-3.39

Storage configuration: ✓ Valid (nvme-tcp mode)

Finding available VM ID: ✓ Using VM ID 990

Allocating 10GB test volume: ✓ tn-nvme:vol-fio-bench-1762630899-nsf29e7fb7-dea1-44d3-bae8-bed0a2dd9244

Waiting for device (5s): ✓ Ready

Detecting device path: ✓ /dev/nvme3n24

Validating device is unused: ✓ Device is safe to test

Starting FIO benchmarks (30 tests, 25-30 minutes total)...

Transport mode: nvme-tcp (testing QD=1, 16, 32, 64, 128)

Sequential Read Bandwidth Tests: [1-5/30]

Queue Depth = 1: ✓ 761.42 MB/s

Queue Depth = 16: ✓ 3.48 GB/s

Queue Depth = 32: ✓ 4.13 GB/s

Queue Depth = 64: ✓ 4.34 GB/s

Queue Depth = 128: ✓ 4.07 GB/s

Sequential Write Bandwidth Tests: [6-10/30]

Queue Depth = 1: ✓ 507.10 MB/s

Queue Depth = 16: ✓ 397.86 MB/s

Queue Depth = 32: ✓ 380.13 MB/s

Queue Depth = 64: ✓ 381.88 MB/s

Queue Depth = 128: ✓ 375.21 MB/s

Random Read IOPS Tests: [11-15/30]

Queue Depth = 1: ✓ 6,095 IOPS

Queue Depth = 16: ✓ 63,947 IOPS

Queue Depth = 32: ✓ 67,264 IOPS

Queue Depth = 64: ✓ 68,497 IOPS

Queue Depth = 128: ✓ 67,753 IOPS

Random Write IOPS Tests: [16-20/30]

Queue Depth = 1: ✓ 3,808 IOPS

Queue Depth = 16: ✓ 3,730 IOPS

Queue Depth = 32: ✓ 3,571 IOPS

Queue Depth = 64: ✓ 3,527 IOPS

Queue Depth = 128: ✓ 3,549 IOPS

Random Read Latency Tests: [21-25/30]

Queue Depth = 1: ✓ 135.18 µs

Queue Depth = 16: ✓ 249.72 µs

Queue Depth = 32: ✓ 479.23 µs

Queue Depth = 64: ✓ 954.86 µs

Queue Depth = 128: ✓ 1.87 ms

Mixed 70/30 Workload Tests: [26-30/30]

Queue Depth = 1: ✓ R: 4,204 / W: 1,799 IOPS

Queue Depth = 16: ✓ R: 9,917 / W: 4,260 IOPS

Queue Depth = 32: ✓ R: 8,228 / W: 3,531 IOPS

Queue Depth = 64: ✓ R: 8,300 / W: 3,562 IOPS

Queue Depth = 128: ✓ R: 8,022 / W: 3,444 IOPS

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Benchmark Summary

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Total tests run: 30

Completed: 30

Top Performers:

Sequential Read: 4.34 GB/s (QD=64 )

Sequential Write: 507.10 MB/s (QD=1 )

Random Read IOPS: 68,497 IOPS (QD=64 )

Random Write IOPS: 3,808 IOPS (QD=1 )

Lowest Latency: 135.18 µs (QD=1 )

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━Here is with round-robin:

```
FIO Storage Benchmark
Running benchmark on storage: tn-nvme
FIO installation: ✓ fio-3.39
Storage configuration: ✓ Valid (nvme-tcp mode)
Finding available VM ID: ✓ Using VM ID 990
Allocating 10GB test volume: ✓ tn-nvme:vol-fio-bench-1762631789-ns6edcf3c3-724c-4c25-b12b-6b35d5543f1a
Waiting for device (5s): ✓ Ready
Detecting device path: ✓ /dev/nvme3n25
Validating device is unused: ✓ Device is safe to test
Starting FIO benchmarks (30 tests, 25-30 minutes total)...
Transport mode: nvme-tcp (testing QD=1, 16, 32, 64, 128)

Sequential Read Bandwidth Tests: [1-5/30]
Queue Depth = 1: ✓ 528.17 MB/s
Queue Depth = 16: ✓ 2.88 GB/s
Queue Depth = 32: ✓ 1.86 GB/s
Queue Depth = 64: ✓ 2.08 GB/s
Queue Depth = 128: ✓ 2.73 GB/s

Sequential Write Bandwidth Tests: [6-10/30]
Queue Depth = 1: ✓ 360.35 MB/s
Queue Depth = 16: ✓ 340.29 MB/s
Queue Depth = 32: ✓ 377.58 MB/s
Queue Depth = 64: ✓ 378.55 MB/s
Queue Depth = 128: ✓ 352.34 MB/s

Random Read IOPS Tests: [11-15/30]
Queue Depth = 1: ✓ 5,613 IOPS
Queue Depth = 16: ✓ 64,160 IOPS
Queue Depth = 32: ✓ 66,288 IOPS
Queue Depth = 64: ✓ 66,266 IOPS
Queue Depth = 128: ✓ 68,716 IOPS

Random Write IOPS Tests: [16-20/30]
Queue Depth = 1: ✓ 2,738 IOPS
Queue Depth = 16: ✓ 4,429 IOPS
Queue Depth = 32: ✓ 4,448 IOPS
Queue Depth = 64: ✓ 2,855 IOPS
Queue Depth = 128: ✓ 2,804 IOPS

Random Read Latency Tests: [21-25/30]
Queue Depth = 1: ✓ 187.71 µs
Queue Depth = 16: ✓ 251.39 µs
Queue Depth = 32: ✓ 477.93 µs
Queue Depth = 64: ✓ 977.68 µs
Queue Depth = 128: ✓ 1.88 ms

Mixed 70/30 Workload Tests: [26-30/30]
Queue Depth = 1: ✓ R: 3,228 / W: 1,380 IOPS
Queue Depth = 16: ✓ R: 11,856 / W: 5,097 IOPS
Queue Depth = 32: ✓ R: 10,289 / W: 4,417 IOPS
Queue Depth = 64: ✓ R: 10,191 / W: 4,377 IOPS
Queue Depth = 128: ✓ R: 10,558 / W: 4,534 IOPS

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━
Benchmark Summary
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━
Total tests run: 30
Completed: 30

Top Performers:
Sequential Read: 2.88 GB/s (QD=16 )
Sequential Write: 378.55 MB/s (QD=64 )
Random Read IOPS: 68,716 IOPS (QD=128)
Random Write IOPS: 4,448 IOPS (QD=32 )
Lowest Latency: 187.71 µs (QD=1 )
```

So overall, round-robin is worse: peak sequential read drops from 4.34 GB/s to 2.88 GB/s, peak sequential write from 507.10 MB/s to 378.55 MB/s, and QD=1 read latency goes up from 135.18 µs to 187.71 µs. The only results that come out ahead with round-robin are random write IOPS at QD=16/32 and the mixed 70/30 workload at QD=16 and above.
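To put rough numbers on "worse", here is a small Python sketch that computes the relative change between the two "Top Performers" summaries. The values are copied from the runs above; the helper names are my own, and which direction counts as "better" is set per row (lower is better for latency). Note these are best-of-QD figures, so they don't always come from the same queue depth in both runs (sequential read peaks at QD=64 with NUMA but at QD=16 with round-robin).

```python
# "Top Performers" values copied from the two runs above.
# Each row: (metric, unit, numa_value, round_robin_value, higher_is_better)
RESULTS = [
    ("Sequential read", "GB/s", 4.34, 2.88, True),
    ("Sequential write", "MB/s", 507.10, 378.55, True),
    ("Random read", "IOPS", 68_497, 68_716, True),
    ("Random write", "IOPS", 3_808, 4_448, True),
    ("QD=1 read latency", "µs", 135.18, 187.71, False),
]


def pct_change(before: float, after: float) -> float:
    """Relative change from `before` (NUMA) to `after` (round-robin), in percent."""
    return (after - before) / before * 100.0


def compare(results):
    """Return (metric, unit, numa, rr, delta_pct, winner) for each row."""
    rows = []
    for name, unit, numa, rr, higher_is_better in results:
        delta = pct_change(numa, rr)
        # For latency a positive delta is a regression, so the verdict flips.
        rr_better = delta > 0 if higher_is_better else delta < 0
        rows.append((name, unit, numa, rr, delta, "round-robin" if rr_better else "numa"))
    return rows


if __name__ == "__main__":
    for name, unit, numa, rr, delta, winner in compare(RESULTS):
        print(f"{name:<18} {numa:>10,.2f} -> {rr:>10,.2f} {unit:<5} {delta:+6.1f}%  ({winner})")
```

Comparing the per-QD lines instead of the summary would be the more apples-to-apples check, since the summary picks each run's best queue depth independently.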

I'll see if there's some simple tuning that needs to be done on PVE.