Thanks for reading. I'm a newbie to Proxmox (one week in). I had a good setup (i.e. things were working exactly as expected) and I was playing with various Proxmox assets when my NAS VM (openmediavault) suddenly stopped working on July 2, 2022. It was set up with my LSI HBA passed through to the OS. There was a lot of installing and updating going on across various VMs and CTs, so I have no idea what set it off. I attempted a bunch of troubleshooting, but with no luck. The BIOS appears to POST, and the LSI HBA BIOS runs and finds all the drives, but with the PCIe device passed through, the VM halts immediately when it attempts to "boot from Hard Disk". If I remove the PCI device from the hardware list, the VM boots perfectly. The halt is accompanied by memory usage climbing to the maximum and one CPU core pegged at full usage. I suspect a bug, with a runaway process eating all the RAM, but I have no idea how to troubleshoot it.

I created a VM with a bare-bones Debian install and got it running without the PCIe passthrough, then attempted to add the device. That also caused the exact same behavior. The BIOS says that it's booting, but then the GRUB message does not appear.

I decided that I must've blown something up while trying to get some recalcitrant PCI NICs to work with PCI passthrough, so I reinstalled Proxmox from scratch. It now behaves exactly as it did right before the reinstall (i.e. broken).

Any suggestions on how to debug this further, or on what to fix, would be very helpful. There have been no hardware changes at all. I was making some BIOS changes, but I don't think any were made between when it was working and when it stopped working.

Other, probably irrelevant, details:

• I've tried installing the VM both with and without the HBA attached at first boot. With the HBA attached, it boots the first time and completes the install, but on the first attempt to boot into GRUB, memory usage shoots up to almost 100% a couple of seconds after starting and one of the CPUs gets maxed out (25% total CPU usage with 4 cores).

• The VM's kern.log has no entries for the stalled boots.

• I've tried many different combinations of settings in the Hardware and Options lists, and the only change that makes a difference is removing the HBA controller (e.g. adding or removing the second GbE device to/from the VM makes no difference: the VM boots when the HBA is not attached and doesn't boot when it is).

• I have a hunch that a recent update broke this functionality, but I can't figure out which one.


Code:

# dmesg | grep -e DMAR -e IOMMU
[ 0.000000] ACPI: DMAR 0x00000000BF75E0D0 000128 (v01 AMI OEMDMAR 00000001 MSFT 00000097)
[ 0.000000] ACPI: Reserving DMAR table memory at [mem 0xbf75e0d0-0xbf75e1f7]
[ 0.000000] DMAR: IOMMU enabled
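The grep above only shows boot-time lines. To check whether the kernel logs anything when the VM hangs, a minimal sketch like the one below could summarize the host log right after a failed start. `iommu_log_summary` is a hypothetical helper, not a Proxmox tool, and it assumes that DMA faults involving the passed-through HBA would appear on lines containing "DMAR" or "vfio" together with the word "fault".

```sh
# Hypothetical helper: summarize IOMMU/VFIO-related kernel log lines.
# Reads kernel log text on stdin (e.g. piped from dmesg).
iommu_log_summary() {
    log=$(cat)
    # "DMAR: IOMMU enabled" is the line shown in the output above.
    if printf '%s\n' "$log" | grep -q 'DMAR: IOMMU enabled'; then
        echo "iommu: enabled"
    else
        echo "iommu: not reported"
    fi
    # Assumption: faults show up on DMAR or vfio lines mentioning "fault".
    # grep -c prints 0 and exits 1 when nothing matches, hence "|| true".
    faults=$(printf '%s\n' "$log" | grep -i -e 'DMAR' -e 'vfio' | grep -ci 'fault' || true)
    echo "faults: $faults"
}
```

Usage on the host, right after a VM start that stalls: `dmesg | iommu_log_summary`.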

Code:

# cat /etc/default/grub
# If you change this file, run 'update-grub' afterwards to update
…
GRUB_DEFAULT=0
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

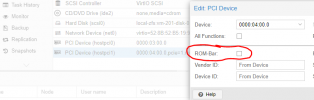

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt transparent_hugepage=always pcie_port_pm=off nofb vfio-pci.ids=1000:0064"
GRUB_CMDLINE_LINUX=""
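Since /etc/default/grub only takes effect after update-grub and a reboot, it may be worth confirming that the running kernel actually received these parameters. As far as I understand, Proxmox installs booted via systemd-boot (for example ZFS root on UEFI) take the command line from /etc/kernel/cmdline instead of GRUB, which is another reason to check /proc/cmdline directly. A small sketch; `missing_cmdline_params` is a hypothetical helper that does whole-word matching on the string it is given.

```sh
# Hypothetical helper: report which expected parameters are missing from a
# kernel command line string. Usage: missing_cmdline_params "<cmdline>" param...
missing_cmdline_params() {
    cmdline=$1
    shift
    missing=""
    for want in "$@"; do
        # Pad with spaces so "iommu=pt" cannot match inside a longer token.
        case " $cmdline " in
            *" $want "*) ;;
            *) missing="$missing $want" ;;
        esac
    done
    # Prints "missing: none" when every parameter was found.
    echo "missing:${missing:- none}"
}
```

For example: `missing_cmdline_params "$(cat /proc/cmdline)" intel_iommu=on iommu=pt vfio-pci.ids=1000:0064`.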

Code:

# find /sys/kernel/iommu_groups/ -type l|grep 37
/sys/kernel/iommu_groups/37/devices/0000:04:00.0
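The `grep 37` above would also match any other path containing "37" (group 137, a device on bus 37, and so on). To confirm that the HBA really is alone in its IOMMU group, a sketch like this lists every group with its member devices. `list_iommu_groups` is a hypothetical name; it only reads directory names under the sysfs path and does not call lspci.

```sh
# Hypothetical helper: print "group <n>: <pci address>" for every device in
# every IOMMU group. The root directory is a parameter (default: real sysfs).
list_iommu_groups() {
    root=${1:-/sys/kernel/iommu_groups}
    for dev in "$root"/*/devices/*; do
        # If the glob matched nothing it stays unexpanded; skip it.
        [ -e "$dev" ] || continue
        group=${dev%/devices/*}
        group=${group##*/}
        echo "group $group: ${dev##*/}"
    done
}
```

Usage: `list_iommu_groups | grep '^group 37:'` should print a single line for 0000:04:00.0 if nothing else shares the group.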

Code:

04:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS2116 PCI-Express Fusion-MPT SAS-2 [Meteor] (rev 02)
Subsystem: Broadcom / LSI SAS 9201-16i
Kernel driver in use: vfio-pci
Kernel modules: mpt3sas
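The "Kernel driver in use" line can also be read straight from sysfs, which is handy for re-checking the binding right after a failed VM start. A sketch with a hypothetical helper, `bound_driver`, relying on the standard sysfs layout where /sys/bus/pci/devices/<address>/driver is a symlink to the bound driver.

```sh
# Hypothetical helper: print the driver bound to a PCI device, or "none".
# Usage: bound_driver <pci address> [sysfs devices dir]
bound_driver() {
    addr=$1
    root=${2:-/sys/bus/pci/devices}
    link="$root/$addr/driver"
    if [ -L "$link" ]; then
        # The "driver" entry is a symlink whose basename is the driver name.
        target=$(readlink "$link")
        echo "${target##*/}"
    else
        echo "none"
    fi
}
```

Usage: `bound_driver 0000:04:00.0` should print vfio-pci, matching the lspci output above; mpt3sas would mean the host driver has claimed the HBA.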

Code:

# uname -r
5.15.30-2-pve
