I just tested on my Gen8s and had forgotten that amsd is for Gen10 only. On Gen9 and below you need to install hp-ams instead. I tested it now and it works nicely.

So the steps would be something like this.

You could probably just download the deb and install it directly, but if it has dependencies, follow the full steps below. There's also a bunch of other "nice to have" tools installable from HPE's repo.

Install gnupg (most probably already installed)

Code:

apt-get update && apt-get install gnupg

Next, the key. Regarding the deprecated bits you mentioned: it's not the keys that are deprecated, it's the apt-key tool itself. You should start getting used to a different way now, since that tool won't be around in the next Debian. Dunno in which Ubuntu version it will be gone permanently.

Install the needed key using gpg:

Code:

wget -O- https://downloads.linux.hpe.com/SDR/hpePublicKey2048_key1.pub | gpg --dearmor > /usr/share/keyrings/hpePublicKey2048-archive-keyring.gpg

The --dearmor bit is needed because this key is ASCII-armored. You can check that with the file command: if the output has "(old)" in it, the key is ASCII-armored. It's good practice to suffix archive keyrings with "archive-keyring".
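If you'd rather not depend on the exact wording of the file output, here is a rough sketch of the same check that just looks for the armor header line. The is_armored function name and the local file name are my own placeholders, and this assumes you saved the key to disk first instead of piping it straight into gpg.

```shell
# Return 0 if the given file contains an ASCII-armor public key header.
is_armored() {
    grep -q -e '-----BEGIN PGP PUBLIC KEY BLOCK-----' "$1"
}

# Assumed local copy of the downloaded key (placeholder name).
key=hpePublicKey2048_key1.pub

if [ -f "$key" ]; then
    file "$key"
    if is_armored "$key"; then
        echo "armored: run it through gpg --dearmor"
    else
        echo "not armored: already in binary form"
    fi
fi
```

Once the keyring is written you can also run gpg --show-keys on the .gpg file to see which key actually ended up in it.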

Now create an apt source file in /etc/apt/sources.list.d:

Code:

echo "deb [signed-by=/usr/share/keyrings/hpePublicKey2048-archive-keyring.gpg] http://downloads.linux.hpe.com/SDR/repo/mcp bullseye/current non-free" > /etc/apt/sources.list.d/hpe.list

Note the "signed-by" part, which points to the public key we just downloaded. Also note that this is for Proxmox 7, which is based on bullseye. Proxmox 6 is based on buster. Modify to your needs.
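If you don't want to hardcode the codename, something like this should work. It's only a sketch: debian_codename is a helper name I made up, it assumes /etc/os-release on your host sets VERSION_CODENAME, and it only prints the line so you can check it before writing it to the .list file. HPE may not publish a repo for every codename, so check that the directory exists on their server.

```shell
# Print the codename from an os-release style file (default: /etc/os-release).
# Runs in a subshell so the sourced variables don't leak into the caller.
debian_codename() {
    ( . "${1:-/etc/os-release}" && printf '%s\n' "$VERSION_CODENAME" )
}

if [ -r /etc/os-release ]; then
    codename=$(debian_codename)
    # Print the source line for review; redirect it to
    # /etc/apt/sources.list.d/hpe.list once it looks right.
    echo "deb [signed-by=/usr/share/keyrings/hpePublicKey2048-archive-keyring.gpg] http://downloads.linux.hpe.com/SDR/repo/mcp ${codename}/current non-free"
fi
```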

Now install what you need. I always grab these; skip them if not needed, but at least run apt-get update.

Code:

apt-get update && apt-get install ssa ssacli ssaducli storcli

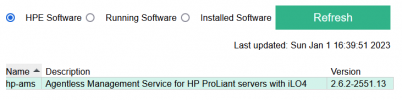

And here's Agentless Management Service for Gen9 and below:

Code:

cd

wget https://downloads.linux.hpe.com/SDR/repo/mcp/debian/pool/non-free/hp-ams_2.6.2-2551.13_amd64.deb

dpkg -i hp-ams_2.6.2-2551.13_amd64.deb
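One note on dependencies: dpkg -i doesn't pull in missing ones by itself, while apt-get can install a local .deb and resolve them in one go, as long as the path starts with ./ or / so apt treats it as a file and not a package name. A small sketch of that, where install_local_deb is just a wrapper name I picked for illustration:

```shell
# Install a local .deb through apt-get so dependencies get resolved.
install_local_deb() {
    deb_path=$1
    if [ ! -f "$deb_path" ]; then
        echo "missing: $deb_path" >&2
        return 1
    fi
    # A bare file name would be read as a package name, so prefix ./ when needed.
    case $deb_path in
        /*|./*) ;;
        *) deb_path=./$deb_path ;;
    esac
    apt-get install "$deb_path"
}

# Usage (as root, from the directory holding the file):
#   install_local_deb hp-ams_2.6.2-2551.13_amd64.deb
```

If you already ran dpkg -i and it complained about dependencies, apt-get install -f should sort that out.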

Remove the deb once it's installed if you don't want to keep it:

Code:

rm hp-ams_2.6.2-2551.13_amd64.deb

Gen10 and above

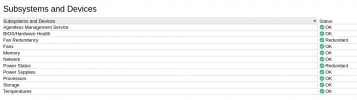

Now watch as your Agentless Management Service indicator goes green

View attachment 35870

Hurray...

Cheers

Marcus