Hi everyone,

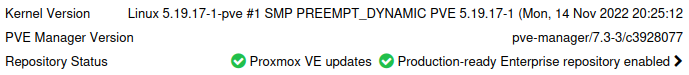

We are experiencing a strange problem. Since the update, a couple of months ago, to:

occasionally we find a machine stuck at 100% CPU and completely unusable.

We found that simply live-migrating the VM to another node gets it working again, without a reset.

Has anyone had similar problems?

Matteo

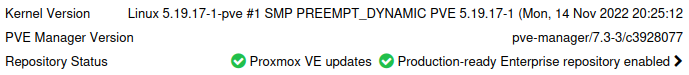