Hi all,

Currently, I have the following setup:

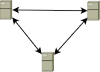

I run a PVE cluster with 3 nodes. Each node has two gigabit network interfaces: one with a public management IP and one on an internal switch with a private IP.

Now, since the public IPs are not on the same network, I created the cluster using the private IPs. The internal network is also used to mount NFS shares.

Thus far everything works really well.

Now, what I would like to know is whether it makes sense to set up Ceph on the three nodes:

- Given that it is 'only' gigabit Ethernet, what will performance be like?

- Even if it is (which I anticipate) too slow for running VM images, would it be suitable for backup purposes?

- Do I need a separate (e.g. VLAN) network on top of the existing private LAN?

- Are there any other caveats I need to watch out for?

Thanks a lot,

Martin
