Hi,

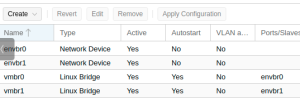

I just updated to the latest version of PVE, and after rebooting, my server was unreachable on all networks.

It took me a while to figure it out, but I found that the latest update renamed some of my network interfaces. Only one network card is affected, but it was still quite troublesome.

I don't know whether this behaviour is expected; it's the first time I've encountered something like this.

I've attached a screenshot.

If you need more info, don't hesitate to ask.