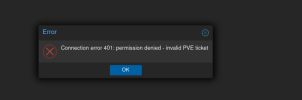

I am running a Proxmox cluster with 3 nodes and have been unable to log in to the GUI since yesterday. SSH still works fine on all nodes and on a few VMs.

I tried troubleshooting based on what I found online, but I still cannot access the GUI. Now some of the VMs on the main node have also become inaccessible. Could someone please guide me on how to resolve this issue?


Code:

root@PvE01Ser05:~# pvecm nodes

Membership information

----------------------

Nodeid Votes Name

1 1 PvE01Ser05 (local)

2 1 PvE02Ser06

3 1 PvE03Ser13

root@PvE01Ser05:~# pvecm status

Cluster information

-------------------

Name: MNeTPvECL01

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon Mar 25 20:14:41 2024

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 0x00000001

Ring ID: 1.1075

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 192.168.5.15 (local)

0x00000002 1 192.168.5.25

0x00000003 1 192.168.5.35

root@PvE01Ser05:~#
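As a sanity check on the numbers above, the `Quorum: 2` value is what a simple majority of the 3 expected votes would give, so the cluster itself appears quorate. The following is only a minimal sketch of that arithmetic; the function name `majority_quorum` is made up for illustration and is not a Proxmox or corosync command.

```shell
#!/bin/sh
# Majority quorum for a given number of expected votes: floor(n / 2) + 1.
# The function name is illustrative only.
majority_quorum() {
    echo $(( $1 / 2 + 1 ))
}

majority_quorum 3   # expected votes taken from the output above; prints 2
```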


Code:

root@PvE01Ser05:~# wget --no-check-certificate https://localhost:8006

--2024-03-25 16:07:53-- https://localhost:8006/

Resolving localhost (localhost)... 127.0.0.1

Connecting to localhost (localhost)|127.0.0.1|:8006... connected.

WARNING: The certificate of ‘localhost’ is not trusted.

WARNING: The certificate of ‘localhost’ doesn't have a known issuer.

HTTP request sent, awaiting response... 200 OK

Length: 2494 (2.4K) [text/html]

Saving to: ‘index.html.1’

index.html.1 100%[==============================================================================================================================================>] 2.44K --.-KB/s in 0s

2024-03-25 16:07:53 (121 MB/s) - ‘index.html.1’ saved [2494/2494]

Tried restarting pvedaemon and pveproxy:

Code:

systemctl restart pveproxy.service pvedaemon.service

Failed to restart pveproxy.service: Transaction for pveproxy.service/restart is destructive (dev-disk-by\x2did-dm\x2dname\x2dpve\x2dswap.swap has 'stop' job queued, but 'start' is included in transaction).

See system logs and 'systemctl status pveproxy.service' for details.

Failed to restart pvedaemon.service: Transaction for pvedaemon.service/restart is destructive (systemd-binfmt.service has 'stop' job queued, but 'start' is included in transaction).

See system logs and 'systemctl status pvedaemon.service' for details.

Other troubleshooting steps:

Code:

root@PvE01Ser05:~# systemctl status pvedaemon.service

● pvedaemon.service - PVE API Daemon

Loaded: loaded (/lib/systemd/system/pvedaemon.service; enabled; preset: enabled)

Active: active (running) since Mon 2024-03-25 11:42:04 IST; 48min ago

Process: 834 ExecStart=/usr/bin/pvedaemon start (code=exited, status=0/SUCCESS)

Main PID: 863 (pvedaemon)

Tasks: 4 (limit: 18969)

Memory: 152.3M

CPU: 1.260s

CGroup: /system.slice/pvedaemon.service

├─863 pvedaemon

├─864 "pvedaemon worker"

├─865 "pvedaemon worker"

└─866 "pvedaemon worker"

Mar 25 11:42:03 PvE01Ser05 systemd[1]: Starting pvedaemon.service - PVE API Daemon...

Mar 25 11:42:04 PvE01Ser05 pvedaemon[863]: starting server

Mar 25 11:42:04 PvE01Ser05 pvedaemon[863]: starting 3 worker(s)

Mar 25 11:42:04 PvE01Ser05 pvedaemon[863]: worker 864 started

Mar 25 11:42:04 PvE01Ser05 pvedaemon[863]: worker 865 started

Mar 25 11:42:04 PvE01Ser05 pvedaemon[863]: worker 866 started

Mar 25 11:42:04 PvE01Ser05 systemd[1]: Started pvedaemon.service - PVE API Daemon.

systemctl status pvestatd.service

○ pvestatd.service - PVE Status Daemon

Loaded: loaded (/lib/systemd/system/pvestatd.service; enabled; preset: enabled)

Active: inactive (dead) since Mon 2024-03-25 12:13:08 IST; 20min ago

Duration: 31min 2.738s

Process: 820 ExecStart=/usr/bin/pvestatd start (code=exited, status=0/SUCCESS)

Process: 4323 ExecStop=/usr/bin/pvestatd stop (code=exited, status=0/SUCCESS)

Main PID: 835 (code=exited, status=0/SUCCESS)

CPU: 1.571s

Mar 25 11:42:02 PvE01Ser05 systemd[1]: Starting pvestatd.service - PVE Status Daemon...

Mar 25 11:42:03 PvE01Ser05 pvestatd[835]: starting server

Mar 25 11:42:03 PvE01Ser05 systemd[1]: Started pvestatd.service - PVE Status Daemon.

Mar 25 12:13:06 PvE01Ser05 systemd[1]: Stopping pvestatd.service - PVE Status Daemon...

Mar 25 12:13:07 PvE01Ser05 pvestatd[835]: received signal TERM

Mar 25 12:13:07 PvE01Ser05 pvestatd[835]: server closing

Mar 25 12:13:07 PvE01Ser05 pvestatd[835]: server stopped

Mar 25 12:13:08 PvE01Ser05 systemd[1]: pvestatd.service: Deactivated successfully.

Mar 25 12:13:08 PvE01Ser05 systemd[1]: Stopped pvestatd.service - PVE Status Daemon.

Mar 25 12:13:08 PvE01Ser05 systemd[1]: pvestatd.service: Consumed 1.571s CPU time.

systemctl status pveproxy

× pveproxy.service - PVE API Proxy Server

Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; preset: enabled)

Active: failed (Result: timeout) since Mon 2024-03-25 12:12:10 IST; 24min ago

Process: 3523 ExecStartPre=/usr/bin/pvecm updatecerts --silent (code=killed, signal=KILL)

CPU: 318ms

Mar 25 12:10:40 PvE01Ser05 systemd[1]: pveproxy.service: Killing process 2877 (pvecm) with signal SIGKILL.

Mar 25 12:10:40 PvE01Ser05 systemd[1]: pveproxy.service: Killing process 3524 (pvecm) with signal SIGKILL.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Processes still around after final SIGKILL. Entering failed mode.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Failed with result 'timeout'.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Unit process 869 (pvecm) remains running after unit stopped.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Unit process 1576 (pvecm) remains running after unit stopped.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Unit process 2225 (pvecm) remains running after unit stopped.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Unit process 2877 (pvecm) remains running after unit stopped.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: pveproxy.service: Unit process 3524 (pvecm) remains running after unit stopped.

Mar 25 12:12:10 PvE01Ser05 systemd[1]: Stopped pveproxy.service - PVE API Proxy Server.
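Two things in the output above might be worth checking further. The "Transaction ... is destructive" errors mention queued 'stop' jobs, which may mean systemd still has a pending shutdown-type transaction. The `pvecm` processes that survive SIGKILL may be stuck in uninterruptible sleep, possibly waiting on `/etc/pve`. The sketch below is a rough way to look at both; the helper names `queued_stop_units` and `filter_dstate` are hypothetical, and the column layouts are assumed from the usual `systemctl list-jobs --no-legend` and `ps` output.

```shell
#!/bin/sh
# Hypothetical helpers (names are illustrative, not part of Proxmox or systemd).

# Reads `systemctl list-jobs --no-legend` output on stdin and prints the
# units that have a queued "stop" job (assumed columns: JOB UNIT TYPE STATE).
queued_stop_units() {
    awk '$3 == "stop" { print $2 }'
}

# Reads `ps -eo pid,stat,wchan:32,args` output on stdin and keeps the header
# plus every process whose state starts with "D" (uninterruptible sleep).
filter_dstate() {
    awk 'NR == 1 || $2 ~ /^D/'
}
```

On the affected node these could be fed with `systemctl list-jobs --no-legend | queued_stop_units` and `ps -eo pid,stat,wchan:32,args | filter_dstate`. If the leftover `pvecm` PIDs show up in the second list, that would point toward a blocked filesystem rather than pveproxy itself, though this is only a guess from the logs.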